3. Exploratory data analysis of a single glacier

Introduction

Overview

In the previous notebook, we walked through initial steps to read and organize a large raster dataset, and to understand it in the context of spatial areas of interest represented by vector data.

The previous notebook mainly focused on high-level processing operations:

reading a multi-dimensional dataset,

re-organizing along a given dimension,

reducing a large raster object to smaller areas of interest represented by vector data, and

strategies for completing these steps with larger-than-memory datasets.

In this notebook, we will continue performing initial data inspection and exploratory analysis but this time, focused on velocity data clipped to an individual glacier. We demonstrate Xarray functionality for common computations and visualizations.

Outline

Load raster data into memory and visualize with vector data

Examine data coverage

Break down by sensor

Combine sensor-specific subset

B. Examine velocity variability

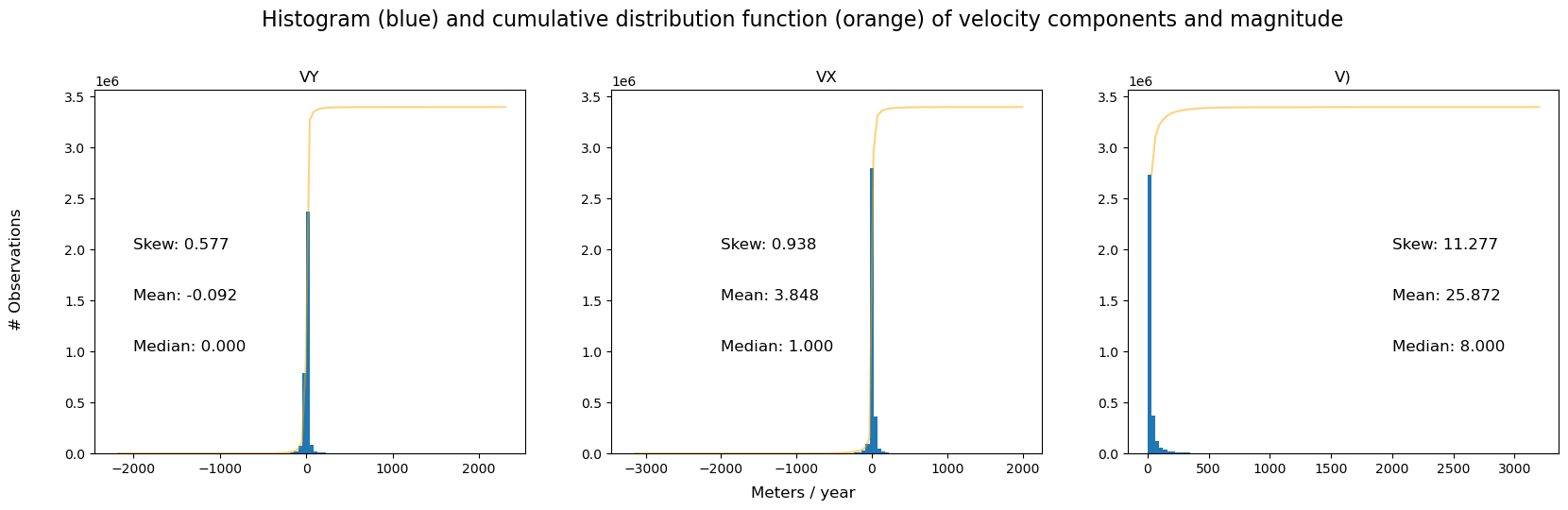

Histograms and summary statistics

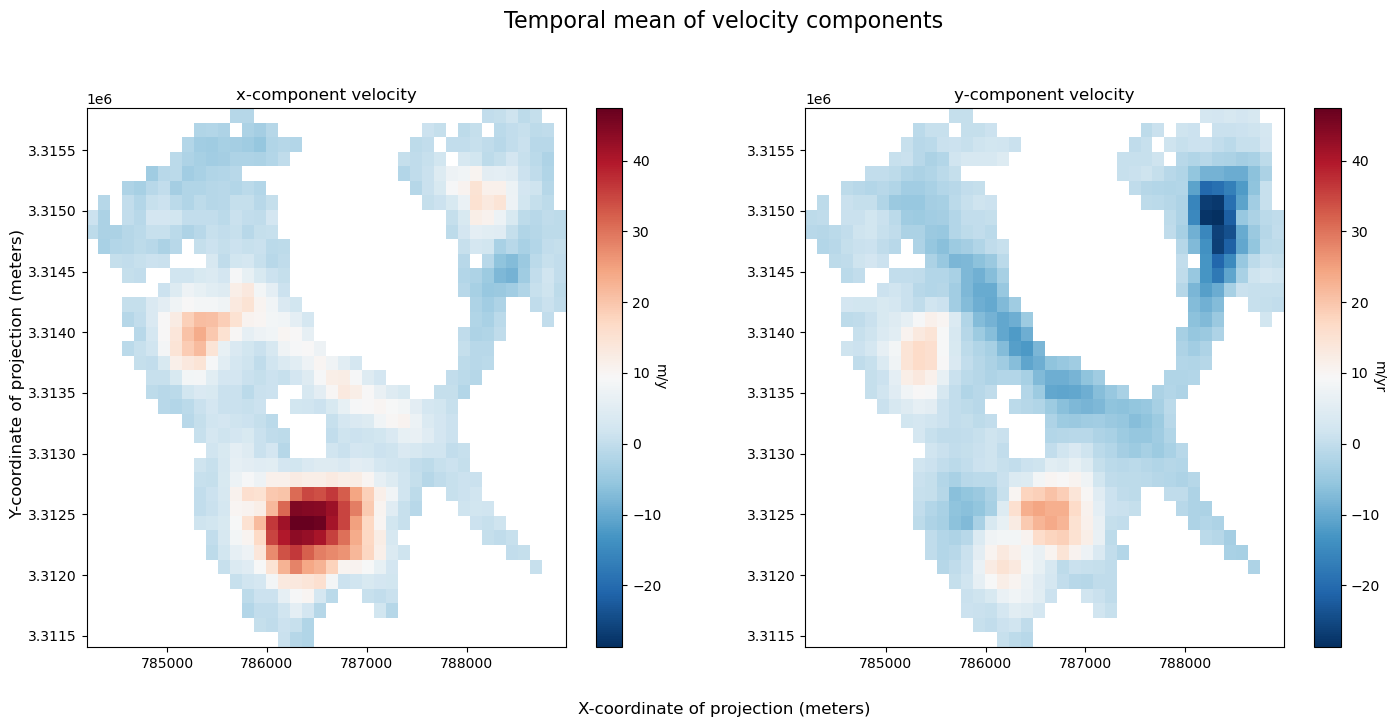

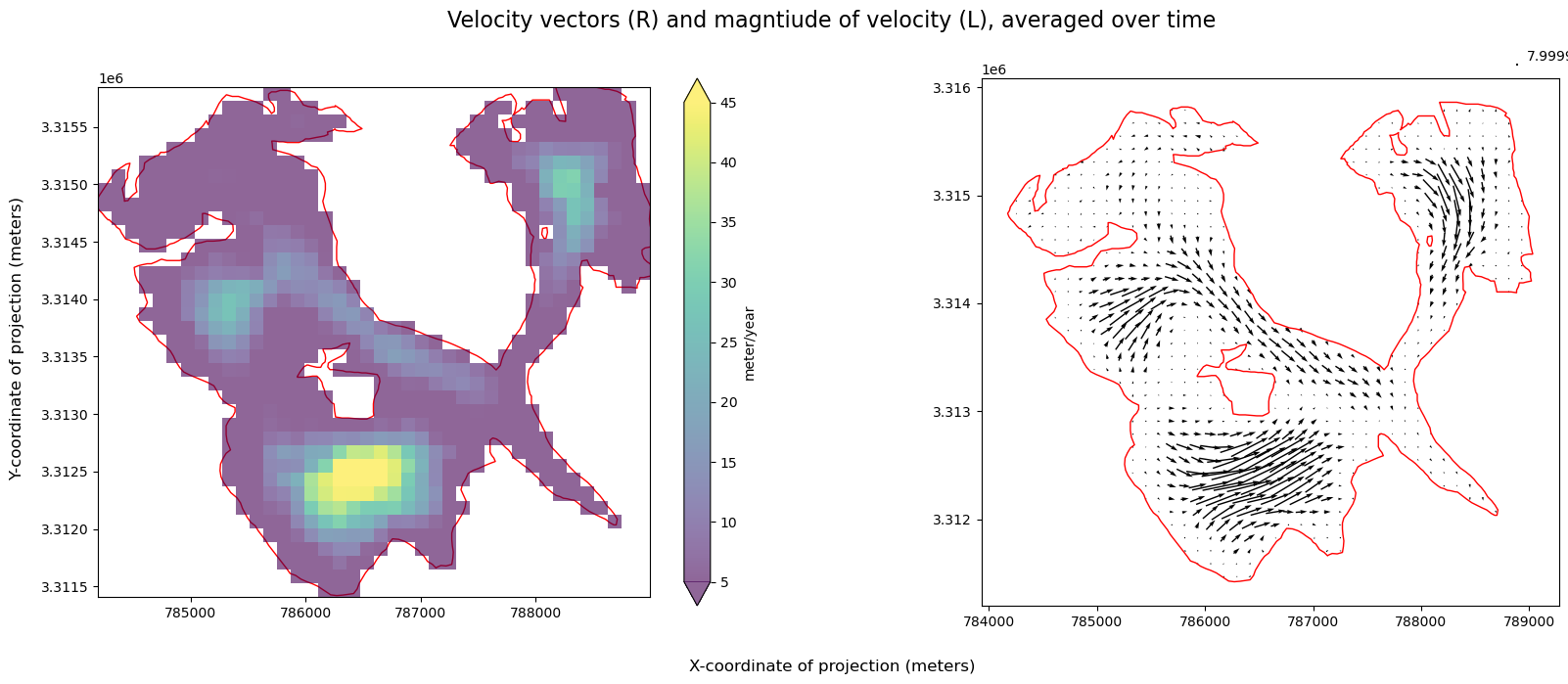

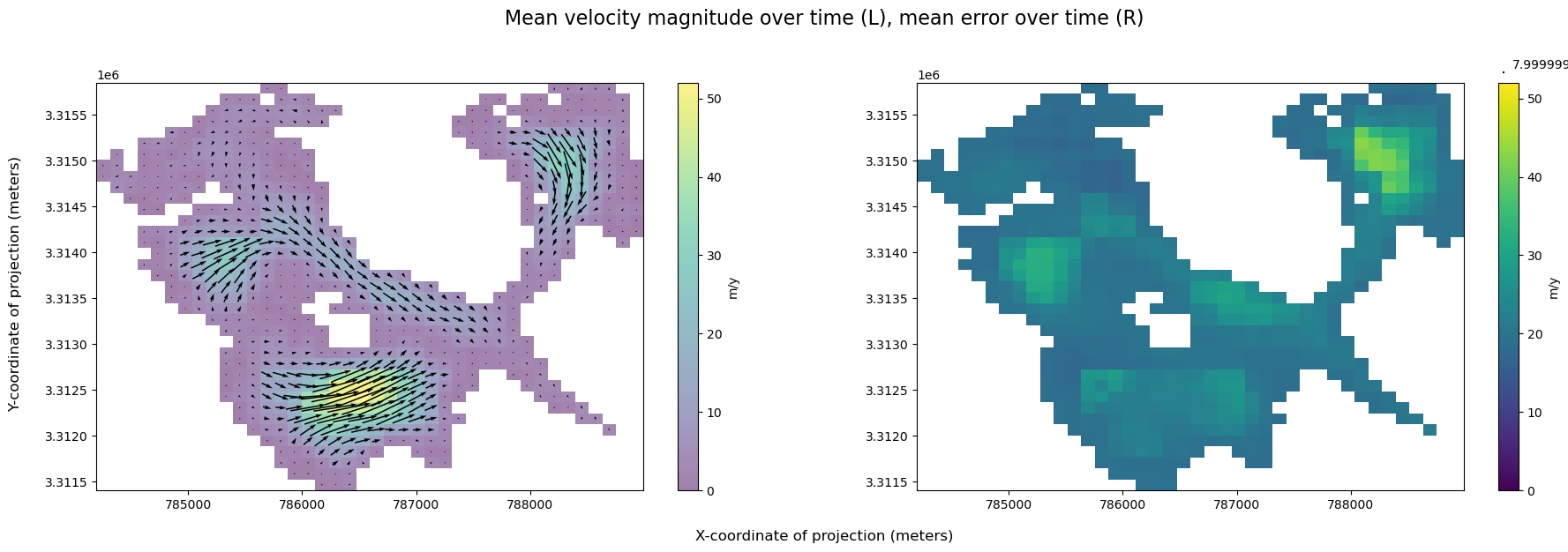

Spatial velocity variability

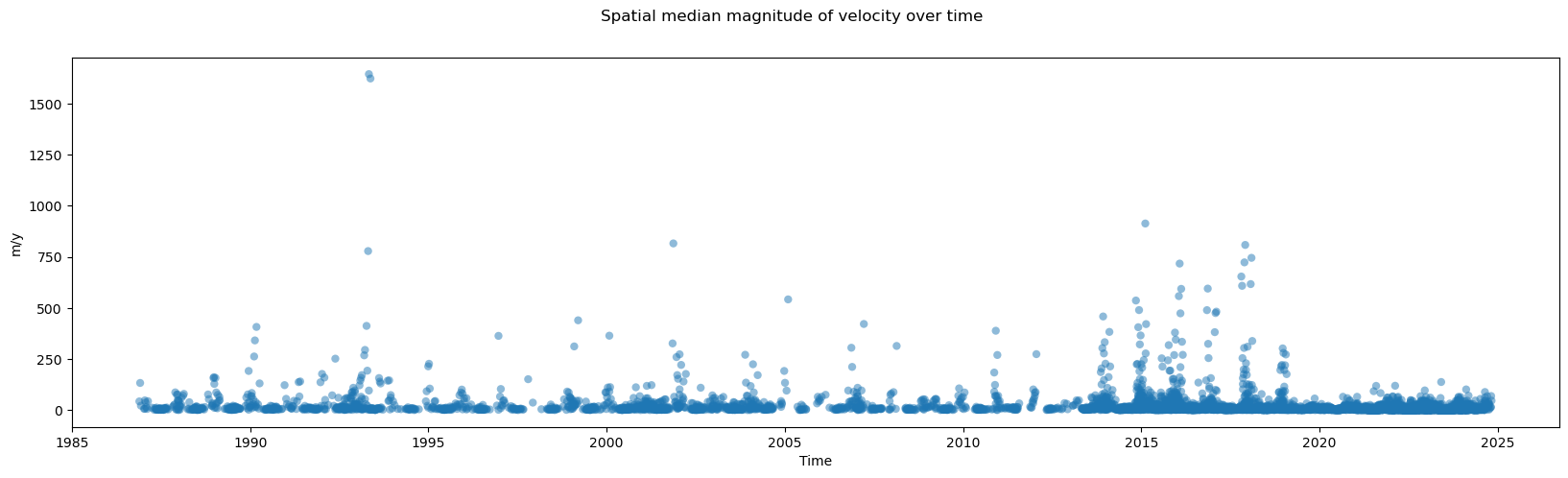

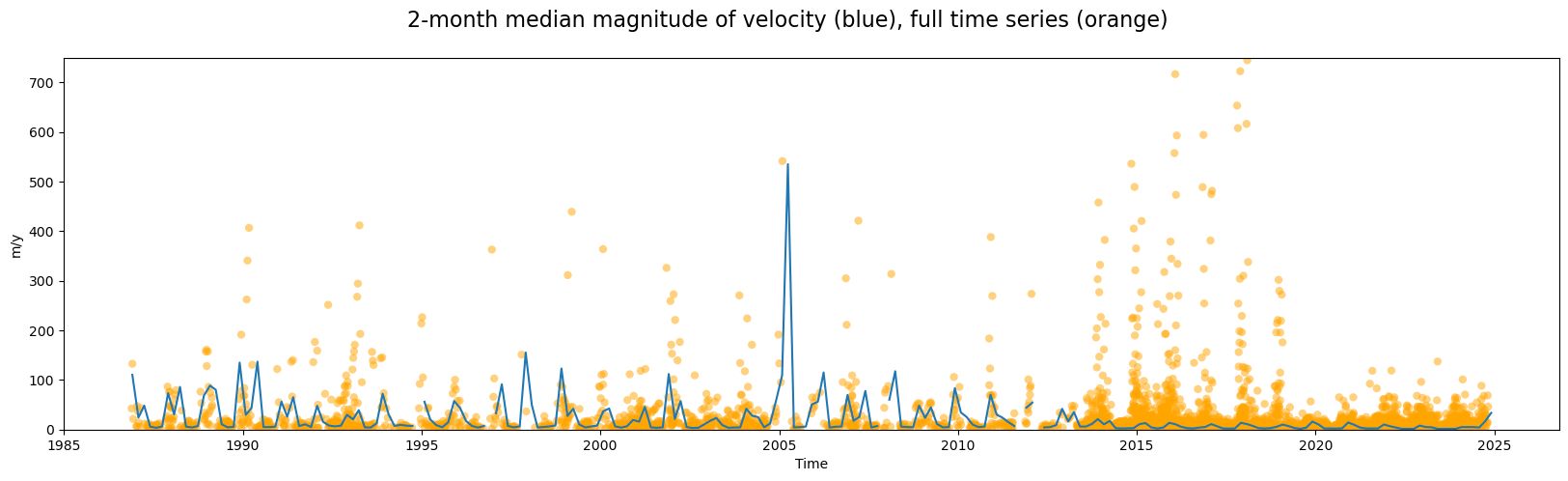

Temporal velocity variability

Temporal resampling

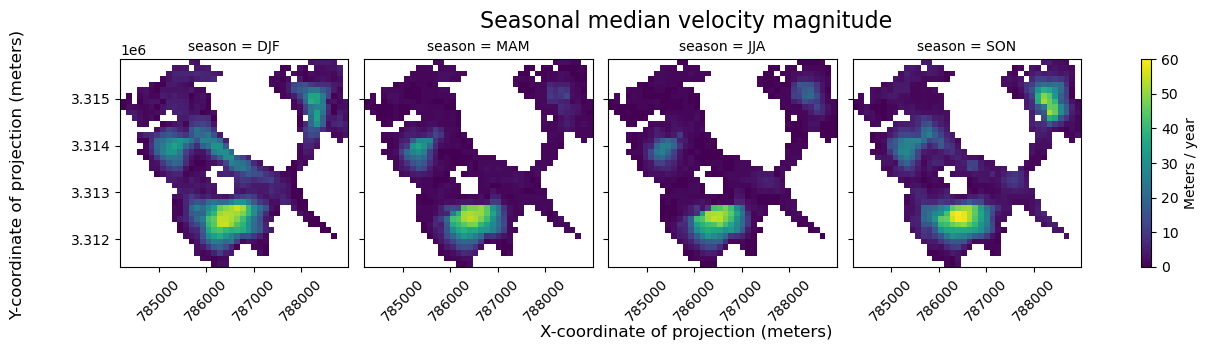

Grouped analysis by season

Learning goals

Concepts

Examining metadata, interpreting physical observable in the contxt of available metadata

Techniques

Using matplotlib to visualize raster and vector data with satellite imagery basemaps

Expand the next cell to see specific packages used in this notebook and relevant system and version information.

Show code cell source Hide code cell source

%xmode minimal

import contextily as cx

import geopandas as gpd

import matplotlib.pyplot as plt

import numpy as np

import rioxarray as rio

import scipy.stats

import warnings

import xarray as xr

warnings.simplefilter(action='ignore', category=FutureWarning)

Show code cell output Hide code cell output

Exception reporting mode: Minimal

A. Data exploration#

1) Load raster data into memory and visualize with vector data#

single_glacier_raster = xr.open_zarr('../data/single_glacier_itslive.zarr')

single_glacier_vector = gpd.read_file('../data/single_glacier_vec.json')

single_glacier_raster.nbytes/ 1e9

3.326255548

The above code cells show us that this dataset contains observations from Sentinel 1 & 2 and Landsat 4,5,6,7,8 & 9 satellite sensors. The dataset is 3.3 GB.

Next, we want to perform computations that require us to load this object into memory. To do this, we use the dask .compute() method, which turns a ‘lazy’ object into an in-memory object. If you try to run compute on too large of an object, your computer may run out of RAM and the kernel being used in this python session will die (if this happens, click ‘restart kernel’ from the kernel drop down menu above).

single_glacier_raster = single_glacier_raster.compute()

Now, if you expand the data object to look at the variables, you will see that they no long hold dask.array objects.

single_glacier_raster

<xarray.Dataset> Size: 3GB

Dimensions: (mid_date: 47892, y: 37, x: 40)

Coordinates:

* mid_date (mid_date) datetime64[ns] 383kB 1986-09-11T03...

spatial_ref int64 8B 0

* x (x) float64 320B 7.843e+05 ... 7.889e+05

* y (y) float64 296B 3.316e+06 ... 3.311e+06

Data variables: (12/60)

M11 (mid_date, y, x) float32 284MB nan nan ... nan

M11_dr_to_vr_factor (mid_date) float32 192kB nan nan nan ... nan nan

M12 (mid_date, y, x) float32 284MB nan nan ... nan

M12_dr_to_vr_factor (mid_date) float32 192kB nan nan nan ... nan nan

acquisition_date_img1 (mid_date) datetime64[ns] 383kB 1986-07-25T03...

acquisition_date_img2 (mid_date) datetime64[ns] 383kB 1986-10-29T03...

... ...

vy_error_modeled (mid_date) float32 192kB 97.0 64.6 ... 930.7

vy_error_slow (mid_date) float32 192kB 30.3 18.9 ... 61.7 50.1

vy_error_stationary (mid_date) float32 192kB 30.2 18.9 ... 61.6 50.1

vy_stable_shift (mid_date) float32 192kB 4.1 -6.9 ... 91.2 91.2

vy_stable_shift_slow (mid_date) float32 192kB 4.2 -6.9 ... 91.2 91.2

vy_stable_shift_stationary (mid_date) float32 192kB 4.1 -6.9 ... 91.2 91.2

Attributes: (12/19)

Conventions: CF-1.8

GDAL_AREA_OR_POINT: Area

author: ITS_LIVE, a NASA MEaSUREs project (its-live.j...

autoRIFT_parameter_file: http://its-live-data.s3.amazonaws.com/autorif...

datacube_software_version: 1.0

date_created: 25-Sep-2023 22:00:23

... ...

s3: s3://its-live-data/datacubes/v2/N30E090/ITS_L...

skipped_granules: s3://its-live-data/datacubes/v2/N30E090/ITS_L...

time_standard_img1: UTC

time_standard_img2: UTC

title: ITS_LIVE datacube of image pair velocities

url: https://its-live-data.s3.amazonaws.com/datacu...- mid_date: 47892

- y: 37

- x: 40

- mid_date(mid_date)datetime64[ns]1986-09-11T03:31:15.003252992 .....

- description :

- midpoint of image 1 and image 2 acquisition date and time with granule's centroid longitude and latitude as microseconds

- standard_name :

- image_pair_center_date_with_time_separation

array(['1986-09-11T03:31:15.003252992', '1986-10-05T03:31:06.144750016', '1986-10-21T03:31:34.493249984', ..., '2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000'], dtype='datetime64[ns]') - spatial_ref()int640

- GeoTransform :

- 784192.5 120.0 0.0 3315847.5 0.0 -120.0

array(0)

- x(x)float647.843e+05 7.844e+05 ... 7.889e+05

- axis :

- X

- description :

- x coordinate of projection

- long_name :

- x coordinate of projection

- standard_name :

- projection_x_coordinate

- units :

- metre

array([784252.5, 784372.5, 784492.5, 784612.5, 784732.5, 784852.5, 784972.5, 785092.5, 785212.5, 785332.5, 785452.5, 785572.5, 785692.5, 785812.5, 785932.5, 786052.5, 786172.5, 786292.5, 786412.5, 786532.5, 786652.5, 786772.5, 786892.5, 787012.5, 787132.5, 787252.5, 787372.5, 787492.5, 787612.5, 787732.5, 787852.5, 787972.5, 788092.5, 788212.5, 788332.5, 788452.5, 788572.5, 788692.5, 788812.5, 788932.5]) - y(y)float643.316e+06 3.316e+06 ... 3.311e+06

- axis :

- Y

- description :

- y coordinate of projection

- long_name :

- y coordinate of projection

- standard_name :

- projection_y_coordinate

- units :

- metre

array([3315787.5, 3315667.5, 3315547.5, 3315427.5, 3315307.5, 3315187.5, 3315067.5, 3314947.5, 3314827.5, 3314707.5, 3314587.5, 3314467.5, 3314347.5, 3314227.5, 3314107.5, 3313987.5, 3313867.5, 3313747.5, 3313627.5, 3313507.5, 3313387.5, 3313267.5, 3313147.5, 3313027.5, 3312907.5, 3312787.5, 3312667.5, 3312547.5, 3312427.5, 3312307.5, 3312187.5, 3312067.5, 3311947.5, 3311827.5, 3311707.5, 3311587.5, 3311467.5])

- M11(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- conversion matrix element (1st row, 1st column) that can be multiplied with vx to give range pixel displacement dr (see Eq. A18 in https://www.mdpi.com/2072-4292/13/4/749)

- grid_mapping :

- mapping

- standard_name :

- conversion_matrix_element_11

- units :

- pixel/(meter/year)

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - M11_dr_to_vr_factor(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- multiplicative factor that converts slant range pixel displacement dr to slant range velocity vr

- standard_name :

- M11_dr_to_vr_factor

- units :

- meter/(year*pixel)

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- M12(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- conversion matrix element (1st row, 2nd column) that can be multiplied with vy to give range pixel displacement dr (see Eq. A18 in https://www.mdpi.com/2072-4292/13/4/749)

- grid_mapping :

- mapping

- standard_name :

- conversion_matrix_element_12

- units :

- pixel/(meter/year)

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - M12_dr_to_vr_factor(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- multiplicative factor that converts slant range pixel displacement dr to slant range velocity vr

- standard_name :

- M12_dr_to_vr_factor

- units :

- meter/(year*pixel)

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- acquisition_date_img1(mid_date)datetime64[ns]1986-07-25T03:32:53.264025024 .....

- description :

- acquisition date and time of image 1

- standard_name :

- image1_acquition_date

array(['1986-07-25T03:32:53.264025024', '1986-07-25T03:32:53.264025024', '1986-07-25T03:32:53.264025024', ..., '2024-10-24T04:17:39.000000000', '2024-10-24T04:17:39.000000000', '2024-10-24T04:17:39.000000000'], dtype='datetime64[ns]') - acquisition_date_img2(mid_date)datetime64[ns]1986-10-29T03:29:35.021030976 .....

- description :

- acquisition date and time of image 2

- standard_name :

- image2_acquition_date

array(['1986-10-29T03:29:35.021030976', '1986-12-16T03:29:17.304025024', '1987-01-17T03:30:14.001025024', ..., '2024-11-03T04:18:38.999999744', '2024-11-03T04:18:38.999999744', '2024-11-03T04:18:38.999999744'], dtype='datetime64[ns]') - autoRIFT_software_version(mid_date)<U5'1.5.0' '1.5.0' ... '1.5.0' '1.5.0'

- description :

- version of autoRIFT software

- standard_name :

- autoRIFT_software_version

array(['1.5.0', '1.5.0', '1.5.0', ..., '1.5.0', '1.5.0', '1.5.0'], dtype='<U5') - chip_size_height(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- chip_size_coordinates :

- Optical data: chip_size_coordinates = 'image projection geometry: width = x, height = y'. Radar data: chip_size_coordinates = 'radar geometry: width = range, height = azimuth'

- description :

- height of search template (chip)

- grid_mapping :

- mapping

- standard_name :

- chip_size_height

- units :

- m

- y_pixel_size :

- 10.0

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - chip_size_width(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- chip_size_coordinates :

- Optical data: chip_size_coordinates = 'image projection geometry: width = x, height = y'. Radar data: chip_size_coordinates = 'radar geometry: width = range, height = azimuth'

- description :

- width of search template (chip)

- grid_mapping :

- mapping

- standard_name :

- chip_size_width

- units :

- m

- x_pixel_size :

- 10.0

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - date_center(mid_date)datetime64[ns]1986-09-11T03:31:14.142528 ... 2...

- description :

- midpoint of image 1 and image 2 acquisition date

- standard_name :

- image_pair_center_date

array(['1986-09-11T03:31:14.142528000', '1986-10-05T03:31:05.284025024', '1986-10-21T03:31:33.632525056', ..., '2024-10-29T04:18:09.000000000', '2024-10-29T04:18:09.000000000', '2024-10-29T04:18:09.000000000'], dtype='datetime64[ns]') - date_dt(mid_date)timedelta64[ns]95 days 23:56:41.586914063 ... 1...

- description :

- time separation between acquisition of image 1 and image 2

- standard_name :

- image_pair_time_separation

array([ 8294201586914063, 12441383789062504, 15206240478515621, ..., 864059985351558, 864059985351558, 864059985351558], dtype='timedelta64[ns]') - floatingice(y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- floating ice mask, 0 = non-floating-ice, 1 = floating-ice

- flag_meanings :

- non-ice ice

- flag_values :

- [0, 1]

- grid_mapping :

- mapping

- standard_name :

- floating ice mask

- url :

- https://its-live-data.s3.amazonaws.com/autorift_parameters/v001/N46_0120m_floatingice.tif

array([[nan, nan, nan, ..., 0., nan, nan], [nan, nan, nan, ..., 0., 0., nan], [nan, nan, nan, ..., 0., 0., nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], dtype=float32) - granule_url(mid_date)<U1024'https://its-live-data.s3.amazon...

- description :

- original granule URL

- standard_name :

- granule_url

array(['https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LT05_L1TP_135039_19860725_20200918_02_T1_X_LT05_L1TP_135039_19861029_20200917_02_T1_G0120V02_P016.nc', 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LT05_L1TP_135039_19860725_20200918_02_T1_X_LT05_L1TP_135039_19861216_20200917_02_T1_G0120V02_P004.nc', 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LT05_L1TP_135039_19860725_20200918_02_T1_X_LT05_L1TP_135039_19870117_20201014_02_T1_G0120V02_P021.nc', ..., 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/sentinel2/v02/N30E090/S2B_MSIL1C_20241024T041739_N0511_R090_T46RFU_20241024T061238_X_S2B_MSIL1C_20241103T041839_N0511_R090_T46RFU_20241103T061311_G0120V02_P041.nc', 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/sentinel2/v02/N30E090/S2B_MSIL1C_20241024T041739_N0511_R090_T46RFV_20241024T061238_X_S2B_MSIL1C_20241103T041839_N0511_R090_T46RFV_20241103T061311_G0120V02_P085.nc', 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/sentinel2/v02/N30E090/S2B_MSIL1C_20241024T041739_N0511_R090_T46RGU_20241024T061238_X_S2B_MSIL1C_20241103T041839_N0511_R090_T46RGU_20241103T061311_G0120V02_P037.nc'], dtype='<U1024') - interp_mask(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- light interpolation mask

- flag_meanings :

- measured interpolated

- flag_values :

- [0, 1]

- grid_mapping :

- mapping

- standard_name :

- interpolated_value_mask

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - landice(y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- land ice mask, 0 = non-land-ice, 1 = land-ice

- flag_meanings :

- non-ice ice

- flag_values :

- [0, 1]

- grid_mapping :

- mapping

- standard_name :

- land ice mask

- url :

- https://its-live-data.s3.amazonaws.com/autorift_parameters/v001/N46_0120m_landice.tif

array([[nan, nan, nan, ..., 1., nan, nan], [nan, nan, nan, ..., 1., 1., nan], [nan, nan, nan, ..., 1., 1., nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], dtype=float32) - mapping()int640

- GeoTransform :

- 784192.5 120.0 0.0 3315847.5 0.0 -120.0

- crs_wkt :

- PROJCS["WGS 84 / UTM zone 46N",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0,AUTHORITY["EPSG","8901"]],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",93],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32646"]]

- false_easting :

- 500000.0

- false_northing :

- 0.0

- geographic_crs_name :

- WGS 84

- grid_mapping_name :

- transverse_mercator

- horizontal_datum_name :

- World Geodetic System 1984

- inverse_flattening :

- 298.257223563

- latitude_of_projection_origin :

- 0.0

- longitude_of_central_meridian :

- 93.0

- longitude_of_prime_meridian :

- 0.0

- prime_meridian_name :

- Greenwich

- projected_crs_name :

- WGS 84 / UTM zone 46N

- reference_ellipsoid_name :

- WGS 84

- scale_factor_at_central_meridian :

- 0.9996

- semi_major_axis :

- 6378137.0

- semi_minor_axis :

- 6356752.314245179

- spatial_ref :

- PROJCS["WGS 84 / UTM zone 46N",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0,AUTHORITY["EPSG","8901"]],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",93],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32646"]]

array(0)

- mission_img1(mid_date)<U1'L' 'L' 'L' 'L' ... 'S' 'S' 'S' 'S'

- description :

- id of the mission that acquired image 1

- standard_name :

- image1_mission

array(['L', 'L', 'L', ..., 'S', 'S', 'S'], dtype='<U1')

- mission_img2(mid_date)<U1'L' 'L' 'L' 'L' ... 'S' 'S' 'S' 'S'

- description :

- id of the mission that acquired image 2

- standard_name :

- image2_mission

array(['L', 'L', 'L', ..., 'S', 'S', 'S'], dtype='<U1')

- roi_valid_percentage(mid_date)float3216.2 4.9 21.6 ... 41.1 85.0 37.4

- description :

- percentage of pixels with a valid velocity estimate determined for the intersection of the full image pair footprint and the region of interest (roi) that defines where autoRIFT tried to estimate a velocity

- standard_name :

- region_of_interest_valid_pixel_percentage

array([16.2, 4.9, 21.6, ..., 41.1, 85. , 37.4], dtype=float32)

- satellite_img1(mid_date)<U2'5' '5' '5' '5' ... '2B' '2B' '2B'

- description :

- id of the satellite that acquired image 1

- standard_name :

- image1_satellite

array(['5', '5', '5', ..., '2B', '2B', '2B'], dtype='<U2')

- satellite_img2(mid_date)<U2'5' '5' '5' '5' ... '2B' '2B' '2B'

- description :

- id of the satellite that acquired image 2

- standard_name :

- image2_satellite

array(['5', '5', '5', ..., '2B', '2B', '2B'], dtype='<U2')

- sensor_img1(mid_date)<U3'T' 'T' 'T' ... 'MSI' 'MSI' 'MSI'

- description :

- id of the sensor that acquired image 1

- standard_name :

- image1_sensor

array(['T', 'T', 'T', ..., 'MSI', 'MSI', 'MSI'], dtype='<U3')

- sensor_img2(mid_date)<U3'T' 'T' 'T' ... 'MSI' 'MSI' 'MSI'

- description :

- id of the sensor that acquired image 2

- standard_name :

- image2_sensor

array(['T', 'T', 'T', ..., 'MSI', 'MSI', 'MSI'], dtype='<U3')

- stable_count_slow(mid_date)uint1659322 31121 5472 ... 36329 17963

- description :

- number of valid pixels over slowest 25% of ice

- standard_name :

- stable_count_slow

- units :

- count

array([59322, 31121, 5472, ..., 24031, 36329, 17963], dtype=uint16)

- stable_count_stationary(mid_date)uint1657272 30584 2260 ... 34878 15661

- description :

- number of valid pixels over stationary or slow-flowing surfaces

- standard_name :

- stable_count_stationary

- units :

- count

array([57272, 30584, 2260, ..., 18749, 34878, 15661], dtype=uint16)

- stable_shift_flag(mid_date)uint81 1 1 1 1 1 1 1 ... 1 1 1 1 1 1 1 1

- description :

- flag for applying velocity bias correction: 0 = no correction; 1 = correction from overlapping stable surface mask (stationary or slow-flowing surfaces with velocity < 15 m/yr)(top priority); 2 = correction from slowest 25% of overlapping velocities (second priority)

- standard_name :

- stable_shift_flag

array([1, 1, 1, ..., 1, 1, 1], dtype=uint8)

- v(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- velocity magnitude

- grid_mapping :

- mapping

- standard_name :

- land_ice_surface_velocity

- units :

- meter/year

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - v_error(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- velocity magnitude error

- grid_mapping :

- mapping

- standard_name :

- velocity_error

- units :

- meter/year

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - va(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- velocity in radar azimuth direction

- grid_mapping :

- mapping

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - va_error(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- error for velocity in radar azimuth direction

- standard_name :

- va_error

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- va_error_modeled(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- 1-sigma error calculated using a modeled error-dt relationship

- standard_name :

- va_error_modeled

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- va_error_slow(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- RMSE over slowest 25% of retrieved velocities

- standard_name :

- va_error_slow

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- va_error_stationary(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- RMSE over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- va_error_stationary

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- va_stable_shift(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- applied va shift calibrated using pixels over stable or slow surfaces

- standard_name :

- va_stable_shift

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- va_stable_shift_slow(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- va shift calibrated using valid pixels over slowest 25% of retrieved velocities

- standard_name :

- va_stable_shift_slow

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- va_stable_shift_stationary(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- va shift calibrated using valid pixels over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- va_stable_shift_stationary

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- vr(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- velocity in radar range direction

- grid_mapping :

- mapping

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - vr_error(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- error for velocity in radar range direction

- standard_name :

- vr_error

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- vr_error_modeled(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- 1-sigma error calculated using a modeled error-dt relationship

- standard_name :

- vr_error_modeled

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- vr_error_slow(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- RMSE over slowest 25% of retrieved velocities

- standard_name :

- vr_error_slow

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- vr_error_stationary(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- RMSE over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- vr_error_stationary

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- vr_stable_shift(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- applied vr shift calibrated using pixels over stable or slow surfaces

- standard_name :

- vr_stable_shift

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- vr_stable_shift_slow(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- vr shift calibrated using valid pixels over slowest 25% of retrieved velocities

- standard_name :

- vr_stable_shift_slow

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- vr_stable_shift_stationary(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- vr shift calibrated using valid pixels over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- vr_stable_shift_stationary

- units :

- meter/year

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- vx(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- velocity component in x direction

- grid_mapping :

- mapping

- standard_name :

- land_ice_surface_x_velocity

- units :

- meter/year

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - vx_error(mid_date)float3227.4 23.3 17.7 ... 40.4 39.4 35.5

- description :

- best estimate of x_velocity error: vx_error is populated according to the approach used for the velocity bias correction as indicated in "stable_shift_flag"

- standard_name :

- vx_error

- units :

- meter/year

array([27.4, 23.3, 17.7, ..., 40.4, 39.4, 35.5], dtype=float32)

- vx_error_modeled(mid_date)float3297.0 64.6 52.9 ... 930.7 930.7

- description :

- 1-sigma error calculated using a modeled error-dt relationship

- standard_name :

- vx_error_modeled

- units :

- meter/year

array([ 97. , 64.6, 52.9, ..., 930.7, 930.7, 930.7], dtype=float32)

- vx_error_slow(mid_date)float3227.3 23.3 17.7 ... 40.3 39.5 35.5

- description :

- RMSE over slowest 25% of retrieved velocities

- standard_name :

- vx_error_slow

- units :

- meter/year

array([27.3, 23.3, 17.7, ..., 40.3, 39.5, 35.5], dtype=float32)

- vx_error_stationary(mid_date)float3227.4 23.3 17.7 ... 40.4 39.4 35.5

- description :

- RMSE over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 meter/year identified from an external mask

- standard_name :

- vx_error_stationary

- units :

- meter/year

array([27.4, 23.3, 17.7, ..., 40.4, 39.4, 35.5], dtype=float32)

- vx_stable_shift(mid_date)float321.5 11.2 7.8 ... -1.4 -22.8 -22.8

- description :

- applied vx shift calibrated using pixels over stable or slow surfaces

- standard_name :

- vx_stable_shift

- units :

- meter/year

array([ 1.5, 11.2, 7.8, ..., -1.4, -22.8, -22.8], dtype=float32)

- vx_stable_shift_slow(mid_date)float321.5 11.2 7.8 ... -1.3 -22.8 -22.8

- description :

- vx shift calibrated using valid pixels over slowest 25% of retrieved velocities

- standard_name :

- vx_stable_shift_slow

- units :

- meter/year

array([ 1.5, 11.2, 7.8, ..., -1.3, -22.8, -22.8], dtype=float32)

- vx_stable_shift_stationary(mid_date)float321.5 11.2 7.8 ... -1.4 -22.8 -22.8

- description :

- vx shift calibrated using valid pixels over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- vx_stable_shift_stationary

- units :

- meter/year

array([ 1.5, 11.2, 7.8, ..., -1.4, -22.8, -22.8], dtype=float32)

- vy(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- velocity component in y direction

- grid_mapping :

- mapping

- standard_name :

- land_ice_surface_y_velocity

- units :

- meter/year

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - vy_error(mid_date)float3230.2 18.9 21.0 ... 62.6 61.6 50.1

- description :

- best estimate of y_velocity error: vy_error is populated according to the approach used for the velocity bias correction as indicated in "stable_shift_flag"

- standard_name :

- vy_error

- units :

- meter/year

array([30.2, 18.9, 21. , ..., 62.6, 61.6, 50.1], dtype=float32)

- vy_error_modeled(mid_date)float3297.0 64.6 52.9 ... 930.7 930.7

- description :

- 1-sigma error calculated using a modeled error-dt relationship

- standard_name :

- vy_error_modeled

- units :

- meter/year

array([ 97. , 64.6, 52.9, ..., 930.7, 930.7, 930.7], dtype=float32)

- vy_error_slow(mid_date)float3230.3 18.9 20.9 ... 62.5 61.7 50.1

- description :

- RMSE over slowest 25% of retrieved velocities

- standard_name :

- vy_error_slow

- units :

- meter/year

array([30.3, 18.9, 20.9, ..., 62.5, 61.7, 50.1], dtype=float32)

- vy_error_stationary(mid_date)float3230.2 18.9 21.0 ... 62.6 61.6 50.1

- description :

- RMSE over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 meter/year identified from an external mask

- standard_name :

- vy_error_stationary

- units :

- meter/year

array([30.2, 18.9, 21. , ..., 62.6, 61.6, 50.1], dtype=float32)

- vy_stable_shift(mid_date)float324.1 -6.9 -2.0 ... 91.2 91.2 91.2

- description :

- applied vy shift calibrated using pixels over stable or slow surfaces

- standard_name :

- vy_stable_shift

- units :

- meter/year

array([ 4.1, -6.9, -2. , ..., 91.2, 91.2, 91.2], dtype=float32)

- vy_stable_shift_slow(mid_date)float324.2 -6.9 -2.0 ... 91.2 91.2 91.2

- description :

- vy shift calibrated using valid pixels over slowest 25% of retrieved velocities

- standard_name :

- vy_stable_shift_slow

- units :

- meter/year

array([ 4.2, -6.9, -2. , ..., 91.2, 91.2, 91.2], dtype=float32)

- vy_stable_shift_stationary(mid_date)float324.1 -6.9 -2.0 ... 91.2 91.2 91.2

- description :

- vy shift calibrated using valid pixels over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- vy_stable_shift_stationary

- units :

- meter/year

array([ 4.1, -6.9, -2. , ..., 91.2, 91.2, 91.2], dtype=float32)

- mid_datePandasIndex

PandasIndex(DatetimeIndex(['1986-09-11 03:31:15.003252992', '1986-10-05 03:31:06.144750016', '1986-10-21 03:31:34.493249984', '1986-11-22 03:29:27.023556992', '1986-11-30 03:29:08.710132992', '1986-12-08 03:29:55.372057024', '1986-12-08 03:33:17.095283968', '1986-12-16 03:30:10.645544', '1986-12-24 03:29:52.332120960', '1987-01-09 03:30:01.787228992', ... '2024-10-21 16:17:50.241008896', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-25 04:10:35.189837056', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024'], dtype='datetime64[ns]', name='mid_date', length=47892, freq=None)) - xPandasIndex

PandasIndex(Index([784252.5, 784372.5, 784492.5, 784612.5, 784732.5, 784852.5, 784972.5, 785092.5, 785212.5, 785332.5, 785452.5, 785572.5, 785692.5, 785812.5, 785932.5, 786052.5, 786172.5, 786292.5, 786412.5, 786532.5, 786652.5, 786772.5, 786892.5, 787012.5, 787132.5, 787252.5, 787372.5, 787492.5, 787612.5, 787732.5, 787852.5, 787972.5, 788092.5, 788212.5, 788332.5, 788452.5, 788572.5, 788692.5, 788812.5, 788932.5], dtype='float64', name='x')) - yPandasIndex

PandasIndex(Index([3315787.5, 3315667.5, 3315547.5, 3315427.5, 3315307.5, 3315187.5, 3315067.5, 3314947.5, 3314827.5, 3314707.5, 3314587.5, 3314467.5, 3314347.5, 3314227.5, 3314107.5, 3313987.5, 3313867.5, 3313747.5, 3313627.5, 3313507.5, 3313387.5, 3313267.5, 3313147.5, 3313027.5, 3312907.5, 3312787.5, 3312667.5, 3312547.5, 3312427.5, 3312307.5, 3312187.5, 3312067.5, 3311947.5, 3311827.5, 3311707.5, 3311587.5, 3311467.5], dtype='float64', name='y'))

- Conventions :

- CF-1.8

- GDAL_AREA_OR_POINT :

- Area

- author :

- ITS_LIVE, a NASA MEaSUREs project (its-live.jpl.nasa.gov)

- autoRIFT_parameter_file :

- http://its-live-data.s3.amazonaws.com/autorift_parameters/v001/autorift_landice_0120m.shp

- datacube_software_version :

- 1.0

- date_created :

- 25-Sep-2023 22:00:23

- date_updated :

- 13-Nov-2024 00:08:07

- geo_polygon :

- [[95.06959008486952, 29.814255053135895], [95.32812062059084, 29.809951334550703], [95.58659184122865, 29.80514261876954], [95.84499718862224, 29.7998293459177], [96.10333011481168, 29.79401200205343], [96.11032804508507, 30.019297601073085], [96.11740568350054, 30.244573983323825], [96.12456379063154, 30.469841094022847], [96.1318031397002, 30.695098878594504], [95.87110827645229, 30.70112924501256], [95.61033817656023, 30.7066371044805], [95.34949964126946, 30.711621947056347], [95.08859948278467, 30.716083310981194], [95.08376623410525, 30.49063893600811], [95.07898726183609, 30.26518607254204], [95.0742620484426, 30.039724763743482], [95.06959008486952, 29.814255053135895]]

- institution :

- NASA Jet Propulsion Laboratory (JPL), California Institute of Technology

- latitude :

- 30.26

- longitude :

- 95.6

- proj_polygon :

- [[700000, 3300000], [725000.0, 3300000.0], [750000.0, 3300000.0], [775000.0, 3300000.0], [800000, 3300000], [800000.0, 3325000.0], [800000.0, 3350000.0], [800000.0, 3375000.0], [800000, 3400000], [775000.0, 3400000.0], [750000.0, 3400000.0], [725000.0, 3400000.0], [700000, 3400000], [700000.0, 3375000.0], [700000.0, 3350000.0], [700000.0, 3325000.0], [700000, 3300000]]

- projection :

- 32646

- s3 :

- s3://its-live-data/datacubes/v2/N30E090/ITS_LIVE_vel_EPSG32646_G0120_X750000_Y3350000.zarr

- skipped_granules :

- s3://its-live-data/datacubes/v2/N30E090/ITS_LIVE_vel_EPSG32646_G0120_X750000_Y3350000.json

- time_standard_img1 :

- UTC

- time_standard_img2 :

- UTC

- title :

- ITS_LIVE datacube of image pair velocities

- url :

- https://its-live-data.s3.amazonaws.com/datacubes/v2/N30E090/ITS_LIVE_vel_EPSG32646_G0120_X750000_Y3350000.zarr

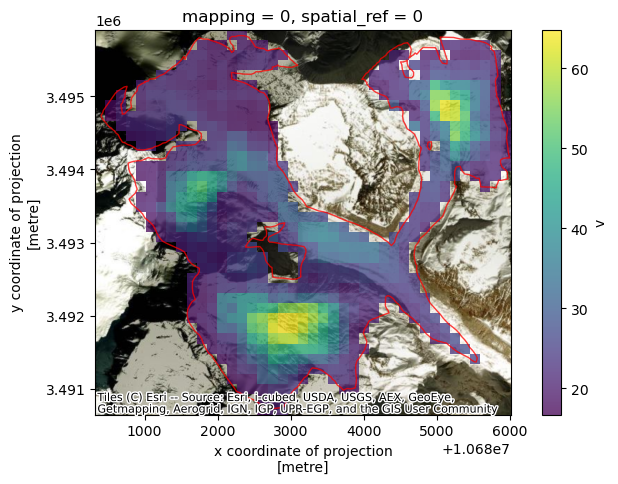

As a quick sanity check, we’ll convince ourselves that the clipping operation in the previous notebook worked correctly. We also show that we can plot both Xarray raster data and GeoPandas vector data overlaid on the same plot with a satellite image basemap as a background.

A few notes about the following figure:

single_glacier_rasteris a 3-dimensional object. We want to plot it in 2-d space with the RGI glacier outline. So, we perform a reduction (in this case, compute the mean), in order to reduce the dataset from 3-d to 2-d (Another option would be to select a single time step).We could make this plot with a white background, but it is also nice to be able to add a base map to the image. Here, we’ll use contextily to do so. This will require converting the coordinate reference system (CRS) of both objects to the Web Mercator projection (EPSG:3857). We first need to use

rio.write_crs()to assign a CRS to the raster object (Note: the data is already projected in the correct CRS, the object is just not ‘aware’ of its CRS. This is necessary for reprojection operations. For more, see Rioxarray’s CRS Management documentation).

#Write CRS of raster data

single_glacier_raster = single_glacier_raster.rio.write_crs(single_glacier_raster.attrs['projection'])

#Check that CRS of vector and raster data are the same

assert single_glacier_raster.rio.crs == single_glacier_vector.crs

#Reproject both objects to web mercator

single_glacier_vector_web = single_glacier_vector.to_crs('EPSG:3857')

single_glacier_raster_web = single_glacier_raster.rio.reproject('EPSG:3857')

fig, ax = plt.subplots(figsize=(8,5))

#Plot objects

single_glacier_raster_web.v.mean(dim='mid_date').plot(ax=ax, cmap='viridis', alpha=0.75, add_colorbar=True)

single_glacier_vector_web.plot(ax=ax, facecolor='None', edgecolor='red', alpha=0.75)

#Add basemap

cx.add_basemap(ax, crs=single_glacier_vector_web.crs, source=cx.providers.Esri.WorldImagery)

;

''

We sorted along the time dimension in the previous notebook, so it should be in chronological order.

single_glacier_raster.mid_date

<xarray.DataArray 'mid_date' (mid_date: 47892)> Size: 383kB

array(['1986-09-11T03:31:15.003252992', '1986-10-05T03:31:06.144750016',

'1986-10-21T03:31:34.493249984', ..., '2024-10-29T04:18:09.241024000',

'2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000'],

dtype='datetime64[ns]')

Coordinates:

mapping int64 8B 0

* mid_date (mid_date) datetime64[ns] 383kB 1986-09-11T03:31:15.00325299...

spatial_ref int64 8B 0

Attributes:

description: midpoint of image 1 and image 2 acquisition date and time...

standard_name: image_pair_center_date_with_time_separation- mid_date: 47892

- 1986-09-11T03:31:15.003252992 ... 2024-10-29T04:18:09.241024

array(['1986-09-11T03:31:15.003252992', '1986-10-05T03:31:06.144750016', '1986-10-21T03:31:34.493249984', ..., '2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000'], dtype='datetime64[ns]') - mapping()int640

- crs_wkt :

- PROJCS["WGS 84 / UTM zone 46N",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",93],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32646"]]

- semi_major_axis :

- 6378137.0

- semi_minor_axis :

- 6356752.314245179

- inverse_flattening :

- 298.257223563

- reference_ellipsoid_name :

- WGS 84

- longitude_of_prime_meridian :

- 0.0

- prime_meridian_name :

- Greenwich

- geographic_crs_name :

- WGS 84

- horizontal_datum_name :

- World Geodetic System 1984

- projected_crs_name :

- WGS 84 / UTM zone 46N

- grid_mapping_name :

- transverse_mercator

- latitude_of_projection_origin :

- 0.0

- longitude_of_central_meridian :

- 93.0

- false_easting :

- 500000.0

- false_northing :

- 0.0

- scale_factor_at_central_meridian :

- 0.9996

- spatial_ref :

- PROJCS["WGS 84 / UTM zone 46N",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",93],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32646"]]

array(0)

- mid_date(mid_date)datetime64[ns]1986-09-11T03:31:15.003252992 .....

- description :

- midpoint of image 1 and image 2 acquisition date and time with granule's centroid longitude and latitude as microseconds

- standard_name :

- image_pair_center_date_with_time_separation

array(['1986-09-11T03:31:15.003252992', '1986-10-05T03:31:06.144750016', '1986-10-21T03:31:34.493249984', ..., '2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000'], dtype='datetime64[ns]') - spatial_ref()int640

- GeoTransform :

- 784192.5 120.0 0.0 3315847.5 0.0 -120.0

array(0)

- mid_datePandasIndex

PandasIndex(DatetimeIndex(['1986-09-11 03:31:15.003252992', '1986-10-05 03:31:06.144750016', '1986-10-21 03:31:34.493249984', '1986-11-22 03:29:27.023556992', '1986-11-30 03:29:08.710132992', '1986-12-08 03:29:55.372057024', '1986-12-08 03:33:17.095283968', '1986-12-16 03:30:10.645544', '1986-12-24 03:29:52.332120960', '1987-01-09 03:30:01.787228992', ... '2024-10-21 16:17:50.241008896', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-25 04:10:35.189837056', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024'], dtype='datetime64[ns]', name='mid_date', length=47892, freq=None))

- description :

- midpoint of image 1 and image 2 acquisition date and time with granule's centroid longitude and latitude as microseconds

- standard_name :

- image_pair_center_date_with_time_separation

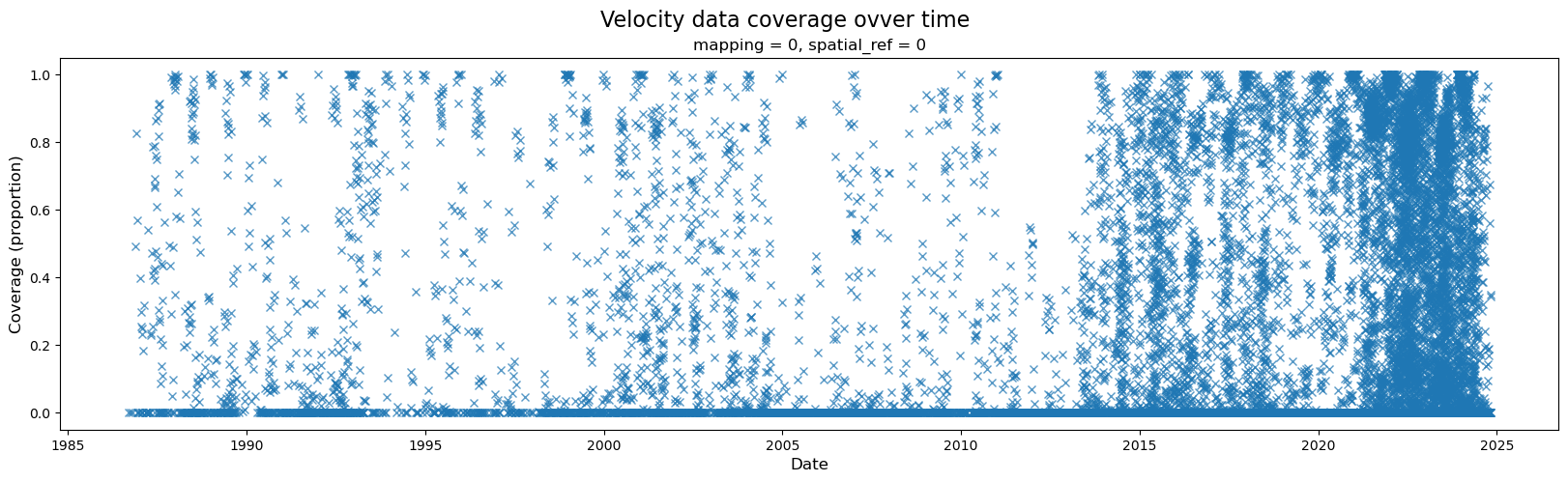

2) Examine data coverage#

A wide variety of forces can impact both satellite imagery and the ability of ITS_LIVE’s feature tracking algorithm to extract velocity estimates from satellite image pairs. For these reasons, there are at times both gaps in coverage and ranges in the estimated error associated with different observations. The following section will demonstrate how to calculate and visualize coverage of the dataset over time. Part 2 will include a discussion of uncertainty and error estimates

When first investigating a dataset, it is helpful to be able to scan/quickly visualize coverage along a given dimension. To create the data needed for such a visualization, we first need a mask that will tell us all possible ‘valid’ pixels; in other words, we need to differentiate between pixels in our 2-d rectangular array that represent ice v. non-ice. Then, for every time step, we can calculate the portion of possible ice pixels that contain an estimated velocity value.

#calculate number of valid pixels

valid_pixels = single_glacier_raster.v.count(dim=['x','y'])

#calculate max. number of valid pixels

valid_pixels_max = single_glacier_raster.v.notnull().any('mid_date').sum(['x','y'])

#add cov proportion to dataset as variable

single_glacier_raster['cov'] = valid_pixels/ valid_pixels_max

Now we can visualize coverage over time:

fig, ax = plt.subplots(figsize=(20,5))

#Plot object

single_glacier_raster['cov'].plot(ax=ax, linestyle='None', marker='x',alpha=0.75)

#Specify axes labels and title

fig.suptitle('Velocity data coverage ovver time', fontsize=16)

ax.set_ylabel('Coverage (proportion)', x=-0.05, fontsize=12)

ax.set_xlabel('Date', fontsize=12);

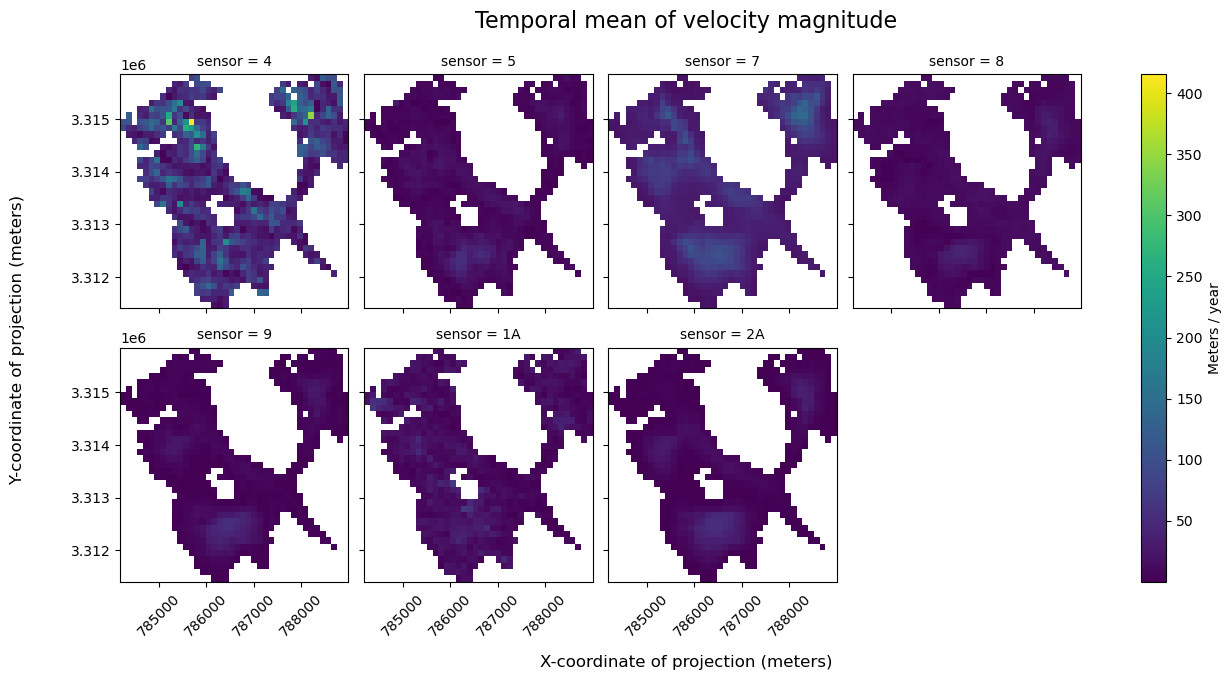

3) Break down by sensor#

In this dataset, we have a dense time series of velocity observations for a given glacier (~48,000 observatioons from 1986-2024 note: this notebook was last updated in 2025). However, we know that the ability of satellite imagery pairs to capture ice displacement (and by extension, velocity), can be impacted by conditions such as cloud-cover, which obscure Earth’s surface from optical sensors. ITS_LIVE is a multi-sensor ice velocity dataset, meaning that it is composed of ice velocity observations derived from a number of satellites, which include both optical and Synthetic Aperture Radar (SAR) imagery. Currently, Sentinel-1 is the only SAR sensor included in ITS_LIVE, all others are optical.

While optical imagery requires solar illumination and can be impacted by cloud cover, Sentinel-1 is an active remote sensing technique and images in a longer wavelength (C-band, ~ 5 cm). This means that Sentinel-1 imagery does not require solar illumination, and can penetrate through cloud cover. Because of these sensors differing sensitivity to Earth’s surface conditions, there can sometimes be discrapancies in velocity data observed from different sensors.

Note

There are many great resources available for understanding the principles of SAR and working with SAR imagery. The appendix at the bottom of this notebook lists a few of them. In addition, Chapter 2 of this tutorial focuses on working with a dataset of Sentinel-1 imagery.

Let’s first look at what sensors are represented in the time series:

sensors = list(set(single_glacier_raster.satellite_img1.values))

sensors

['5', '7', '2A', '8', '9', '2B', '1A', '4']

To extract observations from a single satellite sensor, we will use Xarray indexing and selection methods such as .where() and .sel(). The following cells demonstrate different selection approaches and a brief discussion of the pros and cons of each when working with large and/ or sparse datasets.

Landsat 8#

First, looking at velocity observations from Landsat8 data only:

%%time

l8_data = single_glacier_raster.where(single_glacier_raster['satellite_img1'] == '8', drop=True)

l8_data

CPU times: user 383 ms, sys: 3.54 s, total: 3.92 s

Wall time: 4.43 s

<xarray.Dataset> Size: 208MB

Dimensions: (mid_date: 2688, y: 37, x: 40)

Coordinates:

mapping int64 8B 0

* mid_date (mid_date) datetime64[ns] 22kB 2013-05-20T04:...

spatial_ref int64 8B 0

* x (x) float64 320B 7.843e+05 ... 7.889e+05

* y (y) float64 296B 3.316e+06 ... 3.311e+06

Data variables: (12/60)

M11 (mid_date, y, x) float32 16MB nan nan ... nan

M11_dr_to_vr_factor (mid_date) float32 11kB nan nan nan ... nan nan

M12 (mid_date, y, x) float32 16MB nan nan ... nan

M12_dr_to_vr_factor (mid_date) float32 11kB nan nan nan ... nan nan

acquisition_date_img1 (mid_date) datetime64[ns] 22kB 2013-04-30T04:...

acquisition_date_img2 (mid_date) datetime64[ns] 22kB 2013-06-09T04:...

... ...

vy_error_slow (mid_date) float32 11kB 40.2 10.2 ... 26.5 159.6

vy_error_stationary (mid_date) float32 11kB 40.2 10.2 ... 26.5 159.6

vy_stable_shift (mid_date) float32 11kB -40.2 3.6 ... 17.1 152.1

vy_stable_shift_slow (mid_date) float32 11kB -40.2 3.6 ... 17.1 152.3

vy_stable_shift_stationary (mid_date) float32 11kB -40.2 3.6 ... 17.1 152.1

cov (mid_date) float64 22kB 0.0 0.5969 ... 0.3494

Attributes: (12/19)

Conventions: CF-1.8

GDAL_AREA_OR_POINT: Area

author: ITS_LIVE, a NASA MEaSUREs project (its-live.j...

autoRIFT_parameter_file: http://its-live-data.s3.amazonaws.com/autorif...

datacube_software_version: 1.0

date_created: 25-Sep-2023 22:00:23

... ...

s3: s3://its-live-data/datacubes/v2/N30E090/ITS_L...

skipped_granules: s3://its-live-data/datacubes/v2/N30E090/ITS_L...

time_standard_img1: UTC

time_standard_img2: UTC

title: ITS_LIVE datacube of image pair velocities

url: https://its-live-data.s3.amazonaws.com/datacu...- mid_date: 2688

- y: 37

- x: 40

- mapping()int640

- crs_wkt :

- PROJCS["WGS 84 / UTM zone 46N",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",93],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32646"]]

- semi_major_axis :

- 6378137.0

- semi_minor_axis :

- 6356752.314245179

- inverse_flattening :

- 298.257223563

- reference_ellipsoid_name :

- WGS 84

- longitude_of_prime_meridian :

- 0.0

- prime_meridian_name :

- Greenwich

- geographic_crs_name :

- WGS 84

- horizontal_datum_name :

- World Geodetic System 1984

- projected_crs_name :

- WGS 84 / UTM zone 46N

- grid_mapping_name :

- transverse_mercator

- latitude_of_projection_origin :

- 0.0

- longitude_of_central_meridian :

- 93.0

- false_easting :

- 500000.0

- false_northing :

- 0.0

- scale_factor_at_central_meridian :

- 0.9996

- spatial_ref :

- PROJCS["WGS 84 / UTM zone 46N",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",93],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32646"]]

array(0)

- mid_date(mid_date)datetime64[ns]2013-05-20T04:09:12.113346048 .....

- description :

- midpoint of image 1 and image 2 acquisition date and time with granule's centroid longitude and latitude as microseconds

- standard_name :

- image_pair_center_date_with_time_separation

array(['2013-05-20T04:09:12.113346048', '2013-06-17T04:12:21.907150080', '2013-06-21T04:08:58.673577984', ..., '2024-09-23T04:10:24.512207104', '2024-10-09T04:10:30.654570240', '2024-10-25T04:10:35.189837056'], dtype='datetime64[ns]') - spatial_ref()int640

- GeoTransform :

- 784192.5 120.0 0.0 3315847.5 0.0 -120.0

array(0)

- x(x)float647.843e+05 7.844e+05 ... 7.889e+05

- axis :

- X

- description :

- x coordinate of projection

- long_name :

- x coordinate of projection

- standard_name :

- projection_x_coordinate

- units :

- metre

array([784252.5, 784372.5, 784492.5, 784612.5, 784732.5, 784852.5, 784972.5, 785092.5, 785212.5, 785332.5, 785452.5, 785572.5, 785692.5, 785812.5, 785932.5, 786052.5, 786172.5, 786292.5, 786412.5, 786532.5, 786652.5, 786772.5, 786892.5, 787012.5, 787132.5, 787252.5, 787372.5, 787492.5, 787612.5, 787732.5, 787852.5, 787972.5, 788092.5, 788212.5, 788332.5, 788452.5, 788572.5, 788692.5, 788812.5, 788932.5]) - y(y)float643.316e+06 3.316e+06 ... 3.311e+06

- axis :

- Y

- description :

- y coordinate of projection

- long_name :

- y coordinate of projection

- standard_name :

- projection_y_coordinate

- units :

- metre

array([3315787.5, 3315667.5, 3315547.5, 3315427.5, 3315307.5, 3315187.5, 3315067.5, 3314947.5, 3314827.5, 3314707.5, 3314587.5, 3314467.5, 3314347.5, 3314227.5, 3314107.5, 3313987.5, 3313867.5, 3313747.5, 3313627.5, 3313507.5, 3313387.5, 3313267.5, 3313147.5, 3313027.5, 3312907.5, 3312787.5, 3312667.5, 3312547.5, 3312427.5, 3312307.5, 3312187.5, 3312067.5, 3311947.5, 3311827.5, 3311707.5, 3311587.5, 3311467.5])

- M11(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- conversion matrix element (1st row, 1st column) that can be multiplied with vx to give range pixel displacement dr (see Eq. A18 in https://www.mdpi.com/2072-4292/13/4/749)

- standard_name :

- conversion_matrix_element_11

- units :

- pixel/(meter/year)

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - M11_dr_to_vr_factor(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- multiplicative factor that converts slant range pixel displacement dr to slant range velocity vr

- standard_name :

- M11_dr_to_vr_factor

- units :

- meter/(year*pixel)

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- M12(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- conversion matrix element (1st row, 2nd column) that can be multiplied with vy to give range pixel displacement dr (see Eq. A18 in https://www.mdpi.com/2072-4292/13/4/749)

- standard_name :

- conversion_matrix_element_12

- units :

- pixel/(meter/year)

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - M12_dr_to_vr_factor(mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- multiplicative factor that converts slant range pixel displacement dr to slant range velocity vr

- standard_name :

- M12_dr_to_vr_factor

- units :

- meter/(year*pixel)

array([nan, nan, nan, ..., nan, nan, nan], dtype=float32)

- acquisition_date_img1(mid_date)datetime64[ns]2013-04-30T04:12:14.819000064 .....

- description :

- acquisition date and time of image 1

- standard_name :

- image1_acquition_date

array(['2013-04-30T04:12:14.819000064', '2013-04-30T04:12:14.819000064', '2013-04-30T04:12:14.819000064', ..., '2024-08-18T04:10:11.608381696', '2024-09-19T04:10:23.892904960', '2024-10-21T04:10:32.963236096'], dtype='datetime64[ns]') - acquisition_date_img2(mid_date)datetime64[ns]2013-06-09T04:06:09.146831104 .....

- description :

- acquisition date and time of image 2

- standard_name :

- image2_acquition_date

array(['2013-06-09T04:06:09.146831104', '2013-08-04T04:12:28.734438912', '2013-08-12T04:05:42.267294976', ..., '2024-10-29T04:10:36.934395904', '2024-10-29T04:10:36.934395904', '2024-10-29T04:10:36.934395904'], dtype='datetime64[ns]') - autoRIFT_software_version(mid_date)object'1.5.0' '1.5.0' ... '1.5.0' '1.5.0'

- description :

- version of autoRIFT software

- standard_name :

- autoRIFT_software_version

array(['1.5.0', '1.5.0', '1.5.0', ..., '1.5.0', '1.5.0', '1.5.0'], dtype=object) - chip_size_height(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- chip_size_coordinates :

- Optical data: chip_size_coordinates = 'image projection geometry: width = x, height = y'. Radar data: chip_size_coordinates = 'radar geometry: width = range, height = azimuth'

- description :

- height of search template (chip)

- standard_name :

- chip_size_height

- units :

- m

- y_pixel_size :

- 10.0

array([[[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., 240., nan, nan], [ nan, nan, nan, ..., 480., 240., nan], [ nan, nan, nan, ..., 480., 480., nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., 480., nan, nan], [ nan, nan, nan, ..., 480., nan, nan], [ nan, nan, nan, ..., 480., nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - chip_size_width(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- chip_size_coordinates :

- Optical data: chip_size_coordinates = 'image projection geometry: width = x, height = y'. Radar data: chip_size_coordinates = 'radar geometry: width = range, height = azimuth'

- description :

- width of search template (chip)

- standard_name :

- chip_size_width

- units :

- m

- x_pixel_size :

- 10.0

array([[[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., 240., nan, nan], [ nan, nan, nan, ..., 480., 240., nan], [ nan, nan, nan, ..., 480., 480., nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., 480., nan, nan], [ nan, nan, nan, ..., 480., nan, nan], [ nan, nan, nan, ..., 480., nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - date_center(mid_date)datetime64[ns]2013-05-20T04:09:11.982916096 .....

- description :

- midpoint of image 1 and image 2 acquisition date

- standard_name :

- image_pair_center_date

array(['2013-05-20T04:09:11.982916096', '2013-06-17T04:12:21.776719872', '2013-06-21T04:08:58.543148032', ..., '2024-09-23T04:10:24.271388928', '2024-10-09T04:10:30.413650944', '2024-10-25T04:10:34.948815872'], dtype='datetime64[ns]') - date_dt(mid_date)timedelta64[ns]39 days 23:53:54.484863279 ... 8...

- description :

- time separation between acquisition of image 1 and image 2

- standard_name :

- image_pair_time_separation

array([3455634484863279, 8294413842773441, 8985207128906252, ..., 6220825048828126, 3456013183593747, 691203955078126], dtype='timedelta64[ns]') - floatingice(y, x, mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- floating ice mask, 0 = non-floating-ice, 1 = floating-ice

- flag_meanings :

- non-ice ice

- flag_values :

- [0, 1]

- standard_name :

- floating ice mask

- url :

- https://its-live-data.s3.amazonaws.com/autorift_parameters/v001/N46_0120m_floatingice.tif

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [ 0., 0., 0., ..., 0., 0., 0.], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [ 0., 0., 0., ..., 0., 0., 0.], [ 0., 0., 0., ..., 0., 0., 0.], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - granule_url(mid_date)object'https://its-live-data.s3.amazon...

- description :

- original granule URL

- standard_name :

- granule_url

array(['https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LC08_L1TP_135039_20130430_20200913_02_T1_X_LE07_L1TP_135039_20130609_20200907_02_T1_G0120V02_P012.nc', 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LC08_L1TP_135039_20130430_20200913_02_T1_X_LC08_L1TP_135039_20130804_20200912_02_T1_G0120V02_P024.nc', 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LC08_L1TP_135039_20130430_20200913_02_T1_X_LE07_L1TP_135039_20130812_20200907_02_T1_G0120V02_P029.nc', ..., 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LC08_L1TP_135039_20240818_20240823_02_T1_X_LC09_L1TP_135039_20241029_20241029_02_T1_G0120V02_P014.nc', 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LC08_L1TP_135039_20240919_20240927_02_T1_X_LC09_L1TP_135039_20241029_20241029_02_T1_G0120V02_P012.nc', 'https://its-live-data.s3.amazonaws.com/velocity_image_pair/landsatOLI/v02/N30E090/LC08_L1TP_135039_20241021_20241029_02_T1_X_LC09_L1TP_135039_20241029_20241029_02_T1_G0120V02_P020.nc'], dtype=object) - interp_mask(mid_date, y, x)float32nan nan nan nan ... nan nan nan nan

- description :

- light interpolation mask

- flag_meanings :

- measured interpolated

- flag_values :

- [0, 1]

- standard_name :

- interpolated_value_mask

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., 1., nan, nan], [nan, nan, nan, ..., 1., 1., nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., 1., nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - landice(y, x, mid_date)float32nan nan nan nan ... nan nan nan nan

- description :

- land ice mask, 0 = non-land-ice, 1 = land-ice

- flag_meanings :

- non-ice ice

- flag_values :

- [0, 1]

- standard_name :

- land ice mask

- url :

- https://its-live-data.s3.amazonaws.com/autorift_parameters/v001/N46_0120m_landice.tif

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [ 1., 1., 1., ..., 1., 1., 1.], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [ 1., 1., 1., ..., 1., 1., 1.], [ 1., 1., 1., ..., 1., 1., 1.], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - mission_img1(mid_date)object'L' 'L' 'L' 'L' ... 'L' 'L' 'L' 'L'

- description :

- id of the mission that acquired image 1

- standard_name :

- image1_mission

array(['L', 'L', 'L', ..., 'L', 'L', 'L'], dtype=object)

- mission_img2(mid_date)object'L' 'L' 'L' 'L' ... 'L' 'L' 'L' 'L'

- description :

- id of the mission that acquired image 2