3. Individual glacier data inspection

This notebook walks through the steps to read in and organize velocity data and clip it to the extent of a single glacier. The tools we will use include xarray, rioxarray, geopandas, and flox.

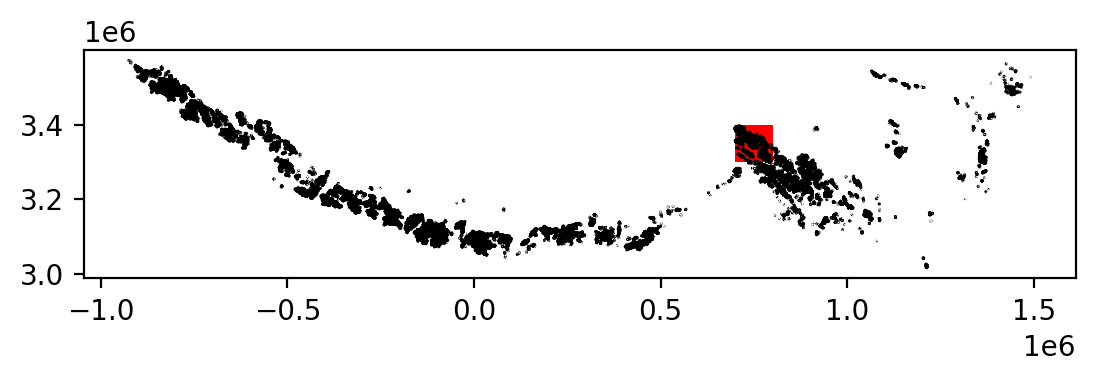

To clip ITS_LIVE data to the extent of a single glacier, we will use a vector dataset of glacier outlines, the Randolph Glacier Inventory.
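Conceptually, clipping keeps the raster cells that fall inside the glacier outline and masks everything else. The pure-Python sketch below illustrates that idea on a made-up 4 x 4 grid and a made-up triangular outline, assuming a cell is kept when its center lies inside the polygon (a ray-casting test). It is only an illustration of the concept: on the real datacube, rioxarray's `.rio.clip()` does this masking for you, and the outline must be in (or declared with) the same coordinate reference system as the raster.

```python
import math


def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if point (x, y) lies inside `polygon`,
    a list of (x, y) vertices (the closing vertex may be omitted)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Count crossings of a ray cast from (x, y) toward +x with this edge
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def clip_grid(values, xs, ys, polygon, fill=math.nan):
    """Copy of the 2-D grid `values` (rows follow ys, columns follow xs) with
    every cell whose center is outside `polygon` replaced by `fill`."""
    return [
        [v if point_in_polygon(x, y, polygon) else fill for v, x in zip(row, xs)]
        for row, y in zip(values, ys)
    ]


# Hypothetical toy data: cell centers of a 4 x 4 grid and a triangular "glacier outline"
xs = [0.5, 1.5, 2.5, 3.5]
ys = [3.5, 2.5, 1.5, 0.5]  # y decreases down the rows, as in the datacube
values = [[float(10 * r + c) for c in range(4)] for r in range(4)]
outline = [(0.0, 0.0), (4.5, 0.0), (0.0, 4.5)]

clipped = clip_grid(values, xs, ys, outline)
for row in clipped:
    print(row)
```

Cells whose centers fall outside the triangle come back as NaN, which is the same "masked, not deleted" behavior you see after clipping an xarray object.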

Learning goals

Concepts

- Subset a large raster dataset to an area of interest using vector data
- Examine different types of data stored within a raster object (in this example, the data is in the format of a zarr datacube)
- Handle different coordinate reference systems and projections
- Inspect a dataset using:
  - Xarray label- and index-based selection
  - Grouped computations and reductions
  - Visualization tools
  - Dimensional reductions and computations

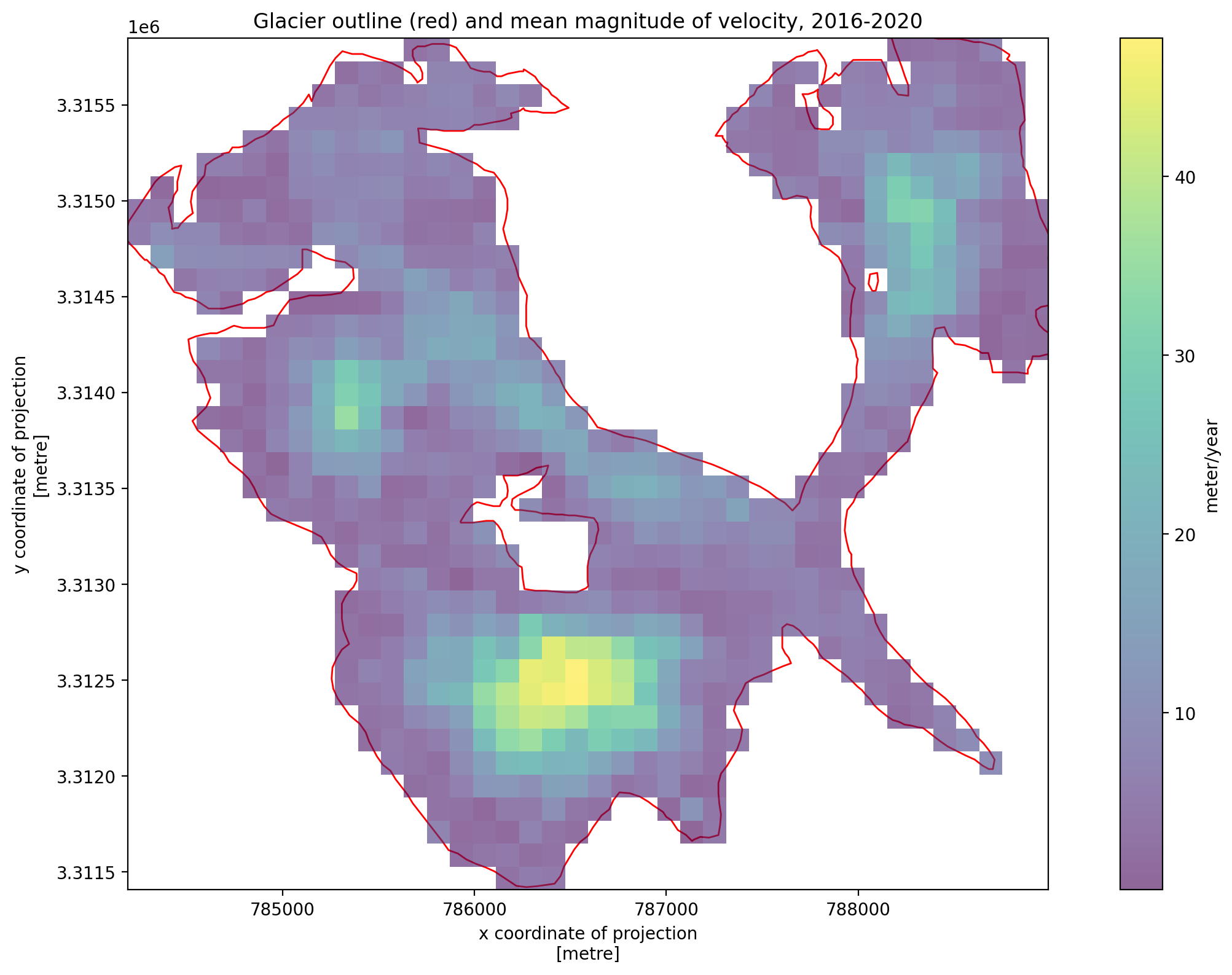

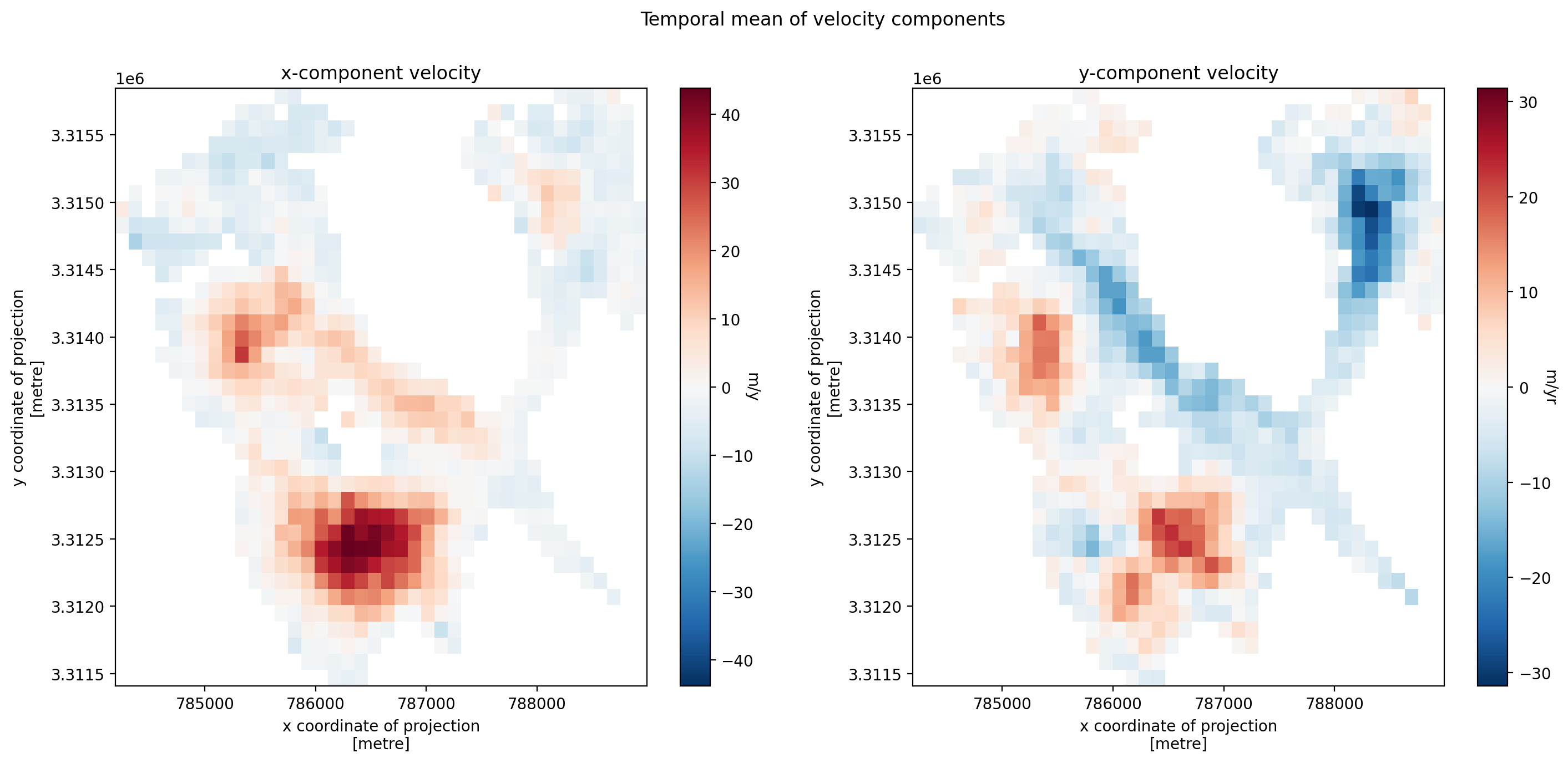

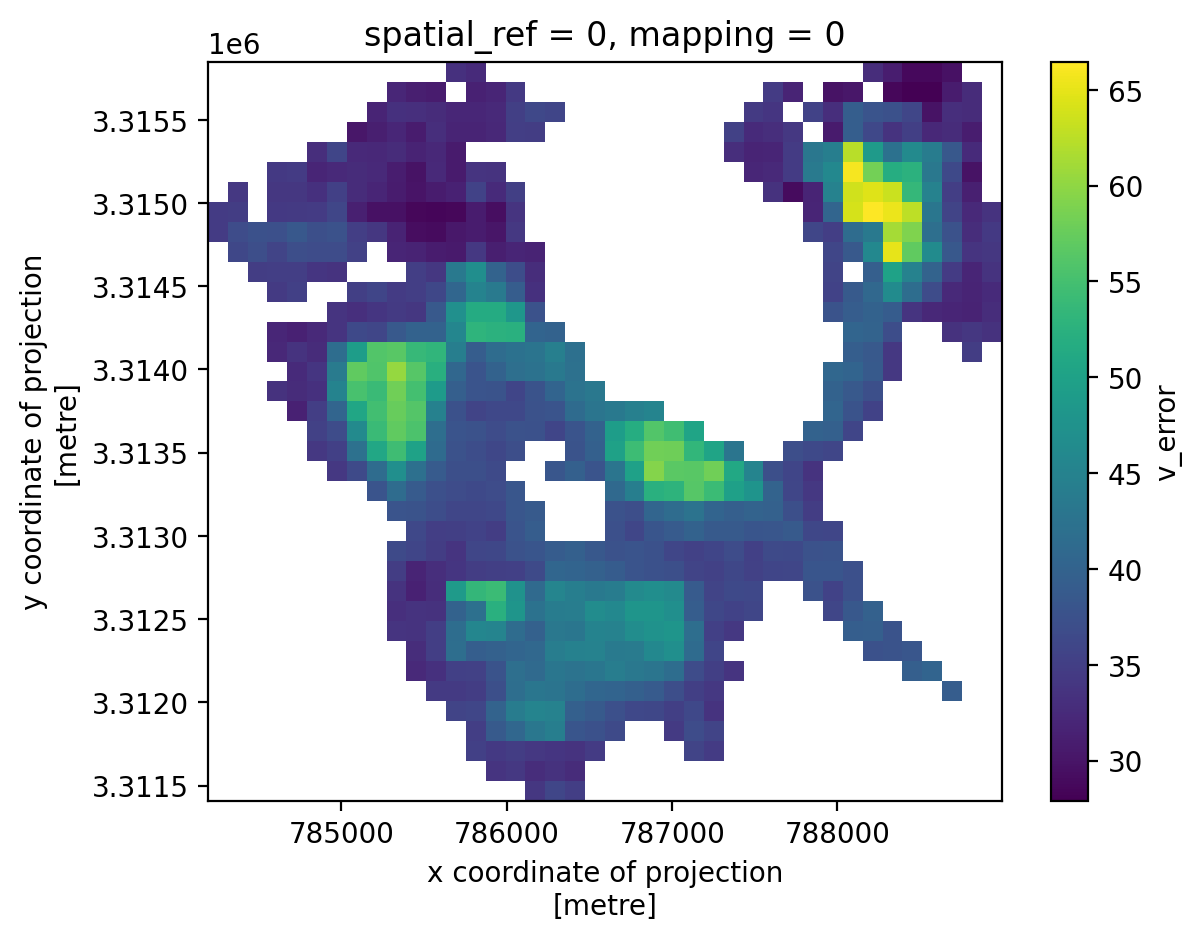

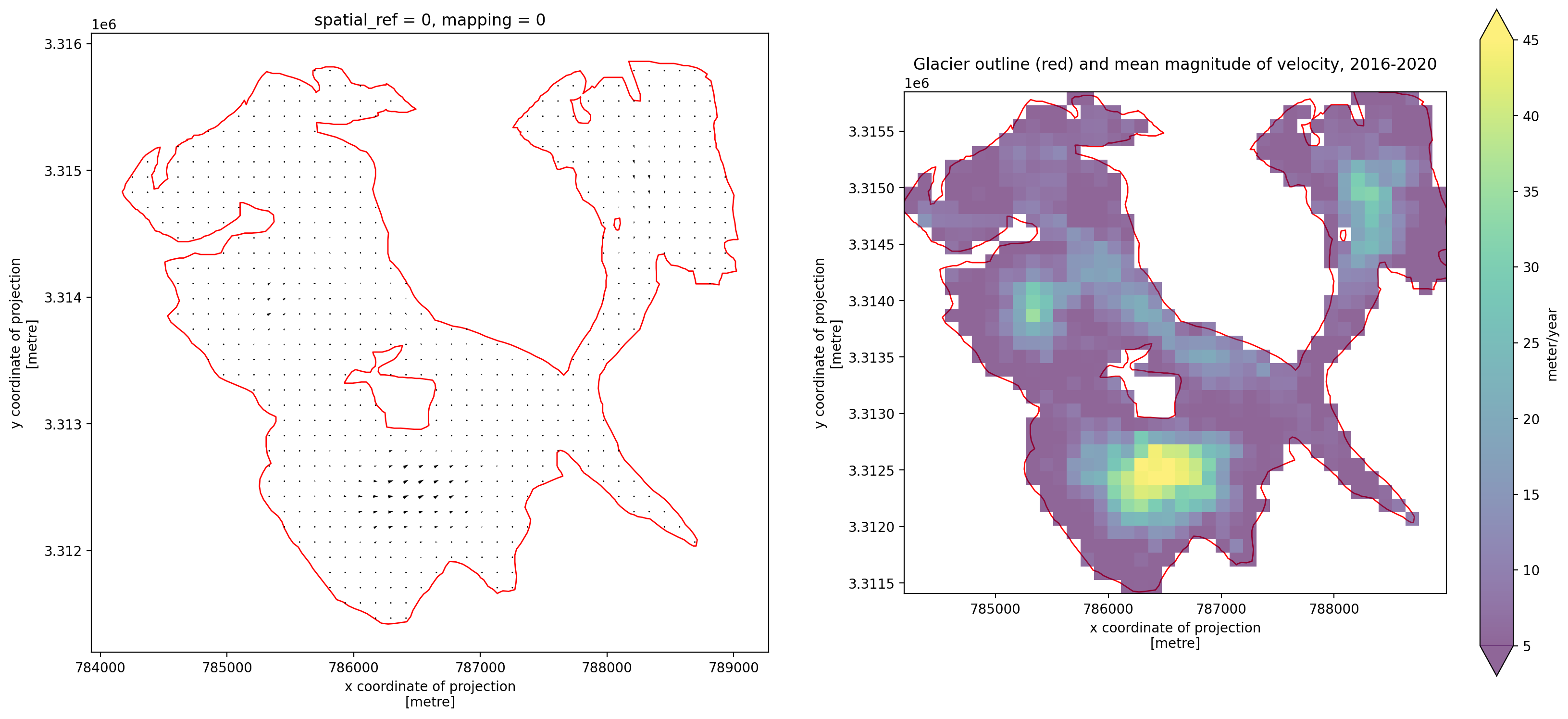

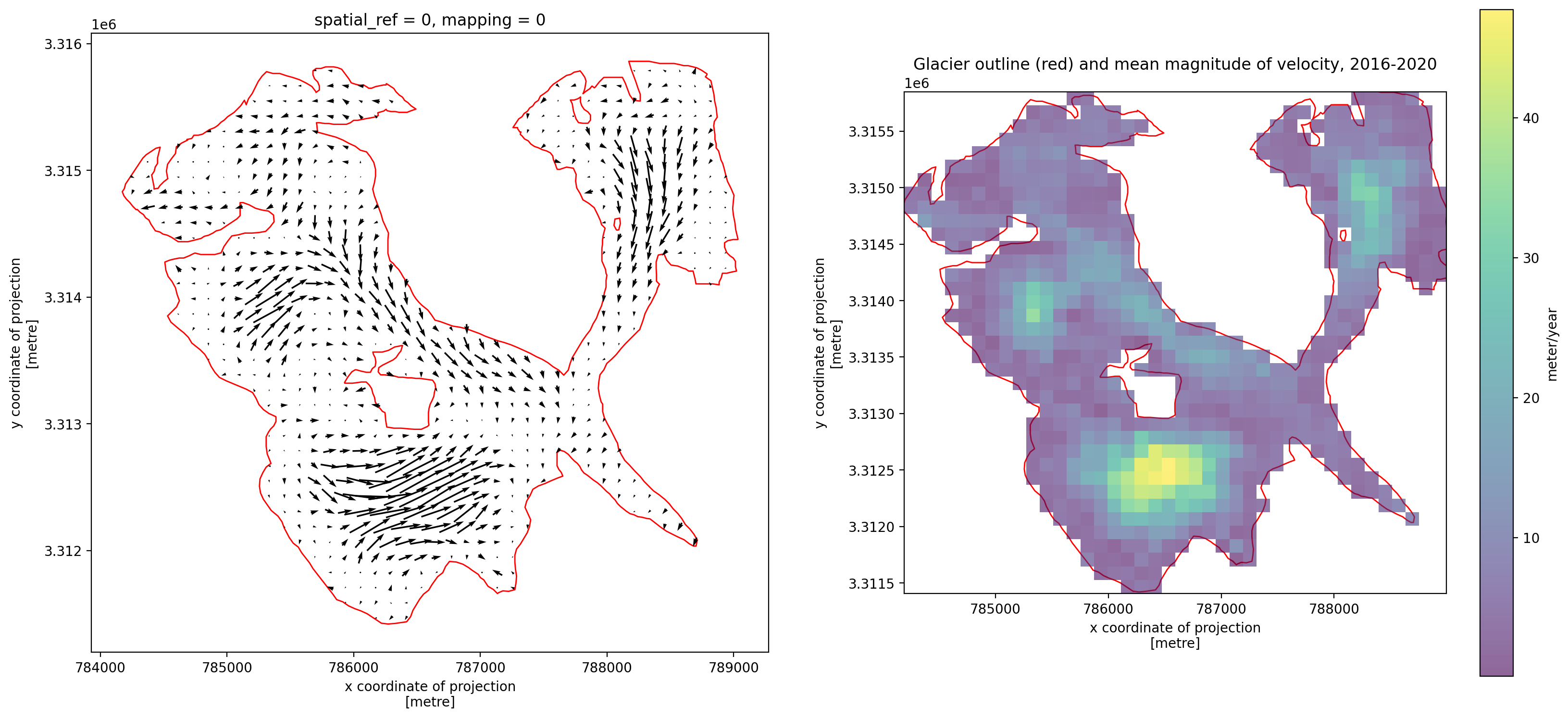

- Examine velocity component vectors

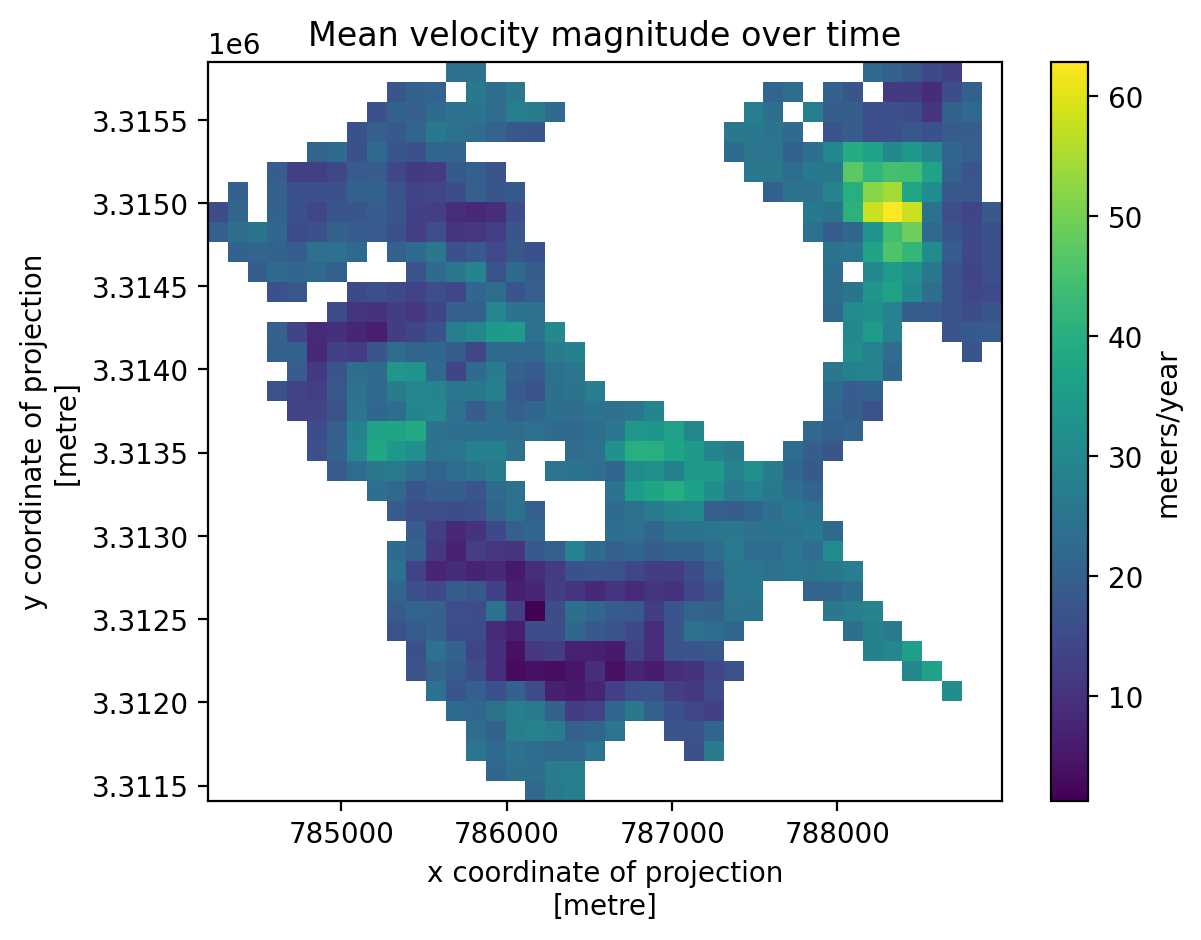

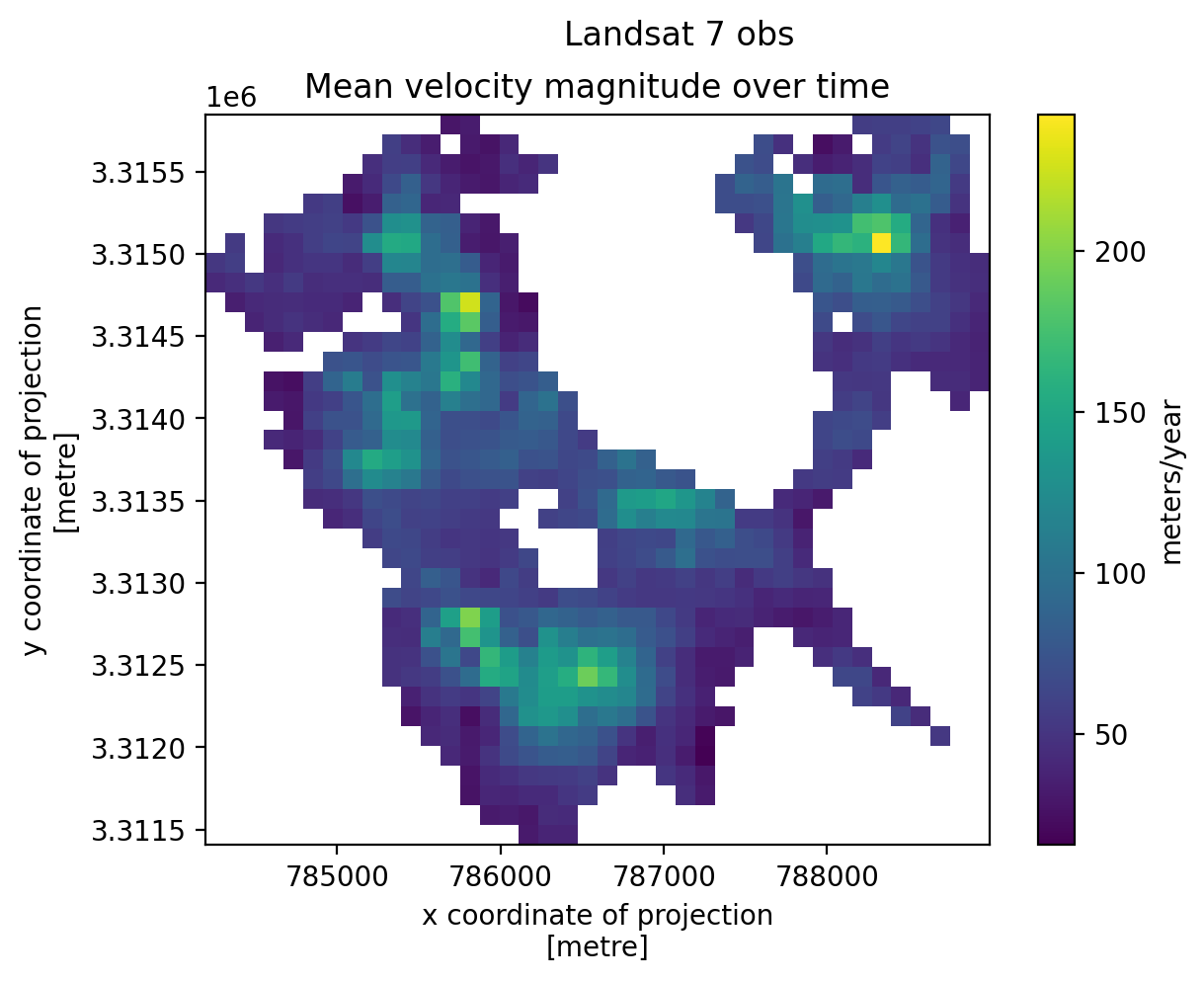

- Calculate the magnitude of the velocity vector from its x and y component vectors
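The magnitude of the horizontal velocity vector follows from its components as v = sqrt(vx^2 + vy^2). The stdlib sketch below uses a few made-up observations to compute that magnitude per observation and then run a grouped reduction (mean speed per calendar month). In Xarray the same pattern would be written along the lines of `speed.groupby("mid_date.month").mean()`, and with flox installed Xarray can use it to speed up such grouped reductions; the function names here are illustrative only.

```python
import math
from collections import defaultdict
from datetime import datetime

# Hypothetical observations: (mid_date, vx, vy), velocities in meter/year
observations = [
    (datetime(2022, 6, 7), 30.0, 40.0),
    (datetime(2022, 6, 20), 60.0, 80.0),
    (datetime(2023, 6, 1), 0.0, 50.0),
    (datetime(2022, 12, 16), 5.0, 12.0),
]


def speed(vx, vy):
    """Magnitude of the velocity vector from its x and y components."""
    return math.hypot(vx, vy)  # sqrt(vx**2 + vy**2)


def monthly_mean_speed(obs):
    """Group observations by calendar month (across years) and average the speed."""
    groups = defaultdict(list)
    for mid_date, vx, vy in obs:
        groups[mid_date.month].append(speed(vx, vy))
    return {month: sum(vals) / len(vals) for month, vals in sorted(groups.items())}


result = monthly_mean_speed(observations)
print(result)  # June averages speeds 50, 100 and 50; December has a single speed of 13
```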

Techniques

- Read in raster data using xarray
- Read in vector data using geopandas
- Manipulate and organize raster data using xarray functionality
- Explore larger-than-memory data using dask and xarray
- Troubleshoot errors and warnings

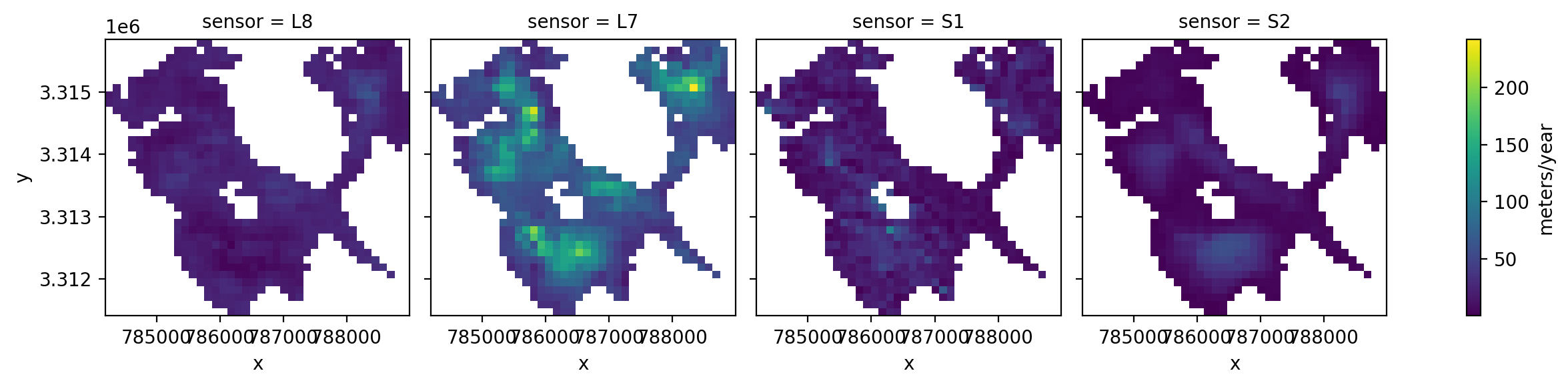

- Visualize Xarray data using FacetGrid objects
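Dask and Xarray make larger-than-memory data tractable by splitting arrays into chunks, computing a partial result for each chunk, and combining the partials. The stdlib sketch below shows that pattern for a mean: each chunk contributes a (sum, count) pair with NaN values skipped (as Xarray's default `skipna=True` does for floats), so only one chunk needs to be held at a time. The chunk generator is a hypothetical stand-in for reading chunks lazily from a zarr store.

```python
import math
from typing import Iterable, Iterator, List, Tuple


def chunks_from(values: List[float], size: int) -> Iterator[List[float]]:
    """Yield successive fixed-size chunks (stand-in for lazily reading chunks from storage)."""
    for start in range(0, len(values), size):
        yield values[start:start + size]


def chunk_partial(chunk: Iterable[float]) -> Tuple[float, int]:
    """Map step: (sum, count) of one chunk, skipping NaN like a skipna=True reduction."""
    total, count = 0.0, 0
    for v in chunk:
        if not math.isnan(v):
            total += v
            count += 1
    return total, count


def chunked_nanmean(chunks: Iterable[Iterable[float]]) -> float:
    """Reduce step: combine per-chunk partial results into a single mean."""
    total, count = 0.0, 0
    for chunk_total, chunk_count in map(chunk_partial, chunks):
        total += chunk_total
        count += chunk_count
    return total / count if count else math.nan


data = [1.0, 2.0, math.nan, 4.0, 5.0, 6.0, math.nan]
mean = chunked_nanmean(chunks_from(data, size=3))  # (1 + 2 + 4 + 5 + 6) / 5 = 3.6
```

Combining (sum, count) pairs rather than per-chunk means keeps the result exact even when chunks contain different numbers of valid values.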

Other useful resources

The following resources contain additional examples and discussion of the content in this notebook and more.

- Xarray's How do I… page: this is very helpful!
- Xarray High-level computational patterns: discussion of concepts and associated code examples
- Parallel computing with dask: Xarray tutorial demonstrating wrapping of dask arrays

Software + Setup

First, let’s import the Python libraries that we’ll need for this notebook:

```python
%xmode minimal
```

Exception reporting mode: Minimal

```python
import json
import os
import urllib.request

import cartopy
import geopandas as gpd
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import rioxarray as rxr
import xarray as xr
from shapely.geometry import MultiPolygon, Point, Polygon

%config InlineBackend.figure_format='retina'
```

```python
import logging

import psutil
from dask.distributed import Client, LocalCluster

# Optional: uncomment to start a local Dask cluster with one single-threaded
# worker per logical CPU, leaving one CPU free for the notebook
#cluster = LocalCluster(
#    n_workers=psutil.cpu_count(logical=True) - 1,
#    silence_logs=logging.ERROR,
#    threads_per_worker=1,
#)
#client = Client(cluster)
#client
```
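The commented-out cluster sets `n_workers` to `psutil.cpu_count(logical=True) - 1`. On a single-core machine that gives zero workers, and `psutil.cpu_count()` returns None when the count cannot be determined. A small guarded helper is sketched below; it is a hypothetical addition, not part of the original notebook, and it uses the standard library's `os.cpu_count()` in place of psutil.

```python
import os
from typing import Optional


def choose_n_workers(logical_cpus: Optional[int], reserve: int = 1) -> int:
    """Number of Dask workers: logical CPUs minus `reserve`, but never fewer than one.

    `logical_cpus` may be None, since os.cpu_count() and psutil.cpu_count()
    both return None when the count cannot be determined.
    """
    if not logical_cpus:
        return 1
    return max(1, logical_cpus - reserve)


n_workers = choose_n_workers(os.cpu_count())
# Usage (uncomment to start the cluster):
# cluster = LocalCluster(n_workers=n_workers, threads_per_worker=1, silence_logs=logging.ERROR)
```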

Reading in ITS_LIVE ice velocity dataset (raster data)

In this notebook, and in others within this tutorial, we will use some of the functions we defined in the data access notebook. They are all stored in the itslivetools.py file. If you cloned this tutorial from its GitHub repository, you’ll see that itslivetools.py is in the same directory as the current notebook, so we can import it with the following line:

```python
import itslivetools
```
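The plain import works because the notebook's working directory is typically on Python's module search path. If you run the notebook from a different location, one option is to put the directory that holds itslivetools.py on `sys.path` first. The self-contained sketch below demonstrates this with a throwaway module written to a temporary directory; `import_from_dir` and `demo_tools` are hypothetical names used only for illustration.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def import_from_dir(module_name: str, directory: str):
    """Import `module_name` from `directory`, prepending the directory to sys.path if needed."""
    directory = str(Path(directory).resolve())
    if directory not in sys.path:
        sys.path.insert(0, directory)
    importlib.invalidate_caches()  # make sure newly created files are noticed
    return importlib.import_module(module_name)


# Demo: write a hypothetical stand-in module to a temporary directory, then import it
tmp_dir = tempfile.mkdtemp()
Path(tmp_dir, "demo_tools.py").write_text("def greet():\n    return 'hello from demo_tools'\n")
demo_tools = import_from_dir("demo_tools", tmp_dir)
print(demo_tools.greet())
```

For this tutorial the call would look like `import_from_dir("itslivetools", "<path to the tutorial notebooks>")`, with the path filled in for your own checkout.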

First, let’s read in the catalog again:

```python
itslive_catalog = gpd.read_file('https://its-live-data.s3.amazonaws.com/datacubes/catalog_v02.json')
```

Next, we’ll use the find_granule_by_point() and read_in_s3() functions to read in the ITS_LIVE zarr datacube as an xarray.Dataset object.

The read_in_s3() function takes a URL that points to a zarr datacube stored in an AWS S3 bucket and returns an xarray dataset.
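A helper like read_in_s3() can be little more than a call to `xr.open_dataset()` with the zarr engine. The sketch below is an assumption about how such a helper could be written, not the actual contents of itslivetools.py: it converts the catalog's HTTP URL into an `s3://` URL (the form recorded in the cube's `s3` attribute) and opens it lazily with anonymous access, which requires the s3fs package. `http_to_s3_url` and `read_in_s3_sketch` are hypothetical names.

```python
from urllib.parse import urlparse

S3_HOST_SUFFIX = ".s3.amazonaws.com"


def http_to_s3_url(http_url: str) -> str:
    """'http(s)://<bucket>.s3.amazonaws.com/<key>' -> 's3://<bucket>/<key>'."""
    parsed = urlparse(http_url)
    if not parsed.netloc.endswith(S3_HOST_SUFFIX):
        raise ValueError(f"not a virtual-hosted-style S3 URL: {http_url}")
    bucket = parsed.netloc[: -len(S3_HOST_SUFFIX)]
    return f"s3://{bucket}{parsed.path}"


def read_in_s3_sketch(http_url: str, chunk_size="auto"):
    """Hypothetical stand-in for itslivetools.read_in_s3(); returns a lazy xarray.Dataset."""
    import xarray as xr  # imported here so the URL helper above works without xarray

    return xr.open_dataset(
        http_to_s3_url(http_url),
        engine="zarr",
        chunks=chunk_size,  # 'auto' lets Dask choose chunks, taking the zarr chunks into account
        storage_options={"anon": True},  # public bucket, no AWS credentials needed
    )
```

Nothing is downloaded when the dataset is opened; data are read chunk by chunk only when a computation needs them.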

I started with chunk_size='auto', which chooses chunk sizes that align with how the data are chunked in the underlying zarr store (this is generally ideal). More about choosing good chunk sizes here. If you want to use a different chunk size, specify it when you call the read_in_s3() function.
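It helps to check what a chunk size means in memory. The datacube opened below reports chunks of (47892, 20, 20) for its float32 (mid_date, y, x) variables on an 833 x 833 grid. The arithmetic sketch below (`chunk_stats` is an illustrative name) reproduces the per-chunk size, the number of chunks per variable, and the roughly 133 GB size of each full variable.

```python
import math

FLOAT32_BYTES = 4  # bytes per float32 element


def chunk_stats(shape, chunks, itemsize=FLOAT32_BYTES):
    """Return (bytes per full chunk, number of chunks, total bytes) for an array.

    Edge chunks can be smaller: 833 = 41 * 20 + 13 along x and y.
    """
    chunk_bytes = math.prod(chunks) * itemsize
    n_chunks = math.prod(math.ceil(s / c) for s, c in zip(shape, chunks))
    total_bytes = math.prod(shape) * itemsize
    return chunk_bytes, n_chunks, total_bytes


chunk_bytes, n_chunks, total_bytes = chunk_stats((47892, 833, 833), (47892, 20, 20))
print(f"{chunk_bytes / 2**20:.2f} MiB per chunk")  # 73.08 MiB per chunk
print(f"{n_chunks} chunks")                         # 1764 chunks
print(f"{total_bytes / 1e9:.0f} GB in total")       # 133 GB in total
```

Each chunk spans the full time axis but only a 20 x 20 pixel window, so extracting a time series at a few pixels touches few chunks, while a map of a single date touches all 1764.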

```python
# Point given as [longitude, latitude]
url = itslivetools.find_granule_by_point([95.180191, 30.645973])
url
```

'http://its-live-data.s3.amazonaws.com/datacubes/v2-updated-october2024/N30E090/ITS_LIVE_vel_EPSG32646_G0120_X750000_Y3350000.zarr'
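The returned URL encodes the cube's layout in its file name. Assuming the naming pattern seen here (EPSG code, grid spacing in meters after `G`, and the cube center after `X` and `Y`, which matches the cube's proj_polygon spanning 700000 to 800000 m easting and 3300000 to 3400000 m northing), a small parser can pull those values out. The standard UTM zone formula then confirms that the query longitude of 95.18° E falls in zone 46, i.e. EPSG:32646 (WGS 84 / UTM zone 46N). `parse_cube_url` and `utm_epsg` are illustrative names.

```python
import math
import re

CUBE_NAME = re.compile(r"EPSG(?P<epsg>\d+)_G(?P<grid>\d+)_X(?P<x>\d+)_Y(?P<y>\d+)\.zarr$")


def parse_cube_url(url: str) -> dict:
    """Extract EPSG code, grid spacing (m) and cube-center coordinates (m) from a cube URL."""
    match = CUBE_NAME.search(url)
    if match is None:
        raise ValueError(f"unrecognized datacube name: {url}")
    return {key: int(value) for key, value in match.groupdict().items()}


def utm_epsg(lon: float, lat: float) -> int:
    """EPSG code of the standard WGS 84 UTM zone containing (lon, lat).

    Ignores the special zones around Norway and Svalbard.
    """
    zone = int(math.floor((lon + 180) / 6)) % 60 + 1
    return (32600 if lat >= 0 else 32700) + zone


url = ("http://its-live-data.s3.amazonaws.com/datacubes/v2-updated-october2024/"
       "N30E090/ITS_LIVE_vel_EPSG32646_G0120_X750000_Y3350000.zarr")
info = parse_cube_url(url)
print(info)  # {'epsg': 32646, 'grid': 120, 'x': 750000, 'y': 3350000}
print(info["epsg"] == utm_epsg(95.180191, 30.645973))  # True
```

The 120 m grid spacing also matches the spacing of the cube's x and y coordinates.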

```python
dc = itslivetools.read_in_s3(url)
dc
```

```
<xarray.Dataset> Size: 1TB
Dimensions:                      (mid_date: 47892, y: 833, x: 833)
Coordinates:
  * mid_date                     (mid_date) datetime64[ns] 383kB 2022-06-07T04...
  * x                            (x) float64 7kB 7.001e+05 7.003e+05 ... 8e+05
  * y                            (y) float64 7kB 3.4e+06 3.4e+06 ... 3.3e+06
Data variables: (12/60)
    M11                          (mid_date, y, x) float32 133GB dask.array<chunksize=(47892, 20, 20), meta=np.ndarray>
    M11_dr_to_vr_factor          (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>
    M12                          (mid_date, y, x) float32 133GB dask.array<chunksize=(47892, 20, 20), meta=np.ndarray>
    M12_dr_to_vr_factor          (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>
    acquisition_date_img1        (mid_date) datetime64[ns] 383kB dask.array<chunksize=(47892,), meta=np.ndarray>
    acquisition_date_img2        (mid_date) datetime64[ns] 383kB dask.array<chunksize=(47892,), meta=np.ndarray>
    ...                           ...
    vy_error_modeled             (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>
    vy_error_slow                (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>
    vy_error_stationary          (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>
    vy_stable_shift              (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>
    vy_stable_shift_slow         (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>
    vy_stable_shift_stationary   (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>
Attributes: (12/19)
    Conventions:                 CF-1.8
    GDAL_AREA_OR_POINT:          Area
    author:                      ITS_LIVE, a NASA MEaSUREs project (its-live.j...
    autoRIFT_parameter_file:     http://its-live-data.s3.amazonaws.com/autorif...
    datacube_software_version:   1.0
    date_created:                25-Sep-2023 22:00:23
    ...                          ...
    s3:                          s3://its-live-data/datacubes/v2/N30E090/ITS_L...
    skipped_granules:            s3://its-live-data/datacubes/v2/N30E090/ITS_L...
    time_standard_img1:          UTC
    time_standard_img2:          UTC
    title:                       ITS_LIVE datacube of image pair velocities
    url:                         https://its-live-data.s3.amazonaws.com/datacu...
```


- projection :

- 32646

- s3 :

- s3://its-live-data/datacubes/v2/N30E090/ITS_LIVE_vel_EPSG32646_G0120_X750000_Y3350000.zarr

- skipped_granules :

- s3://its-live-data/datacubes/v2/N30E090/ITS_LIVE_vel_EPSG32646_G0120_X750000_Y3350000.json

- time_standard_img1 :

- UTC

- time_standard_img2 :

- UTC

- title :

- ITS_LIVE datacube of image pair velocities

- url :

- https://its-live-data.s3.amazonaws.com/datacubes/v2/N30E090/ITS_LIVE_vel_EPSG32646_G0120_X750000_Y3350000.zarr

Working with dask#

We can see that this is a very large dataset. It has a time dimension (mid_date) with a length of 47,892, and x and y dimensions that are both 833 units long. There are 60 variables within this dataset that exist along some or all of the dimensions (with the exception of the mapping data variable, which contains geographic information). If you expand one of the 3-dimensional variables (those that exist along x, y, and mid_date), you’ll see that it is very large (about 124 GiB, or 133 GB!). This is more than my computer can handle, so we will use dask, a Python package for parallelizing and scaling workflows that lets us work with larger-than-memory data efficiently.

dc.v

<xarray.DataArray 'v' (mid_date: 47892, y: 833, x: 833)> Size: 133GB

dask.array<open_dataset-v, shape=(47892, 833, 833), dtype=float32, chunksize=(47892, 20, 20), chunktype=numpy.ndarray>

Coordinates:

* mid_date (mid_date) datetime64[ns] 383kB 2022-06-07T04:21:44.211208960 ....

* x (x) float64 7kB 7.001e+05 7.003e+05 7.004e+05 ... 7.999e+05 8e+05

* y (y) float64 7kB 3.4e+06 3.4e+06 3.4e+06 ... 3.3e+06 3.3e+06

Attributes:

description: velocity magnitude

grid_mapping: mapping

standard_name: land_ice_surface_velocity

units: meter/year

- mid_date: 47892

- y: 833

- x: 833

- dask.array<chunksize=(47892, 20, 20), meta=np.ndarray>

Array Chunk Bytes 123.80 GiB 73.08 MiB Shape (47892, 833, 833) (47892, 20, 20) Dask graph 1764 chunks in 2 graph layers Data type float32 numpy.ndarray - mid_date(mid_date)datetime64[ns]2022-06-07T04:21:44.211208960 .....

- description :

- midpoint of image 1 and image 2 acquisition date and time with granule's centroid longitude and latitude as microseconds

- standard_name :

- image_pair_center_date_with_time_separation

array(['2022-06-07T04:21:44.211208960', '2018-04-14T04:18:49.171219968', '2017-02-10T16:15:50.660901120', ..., '2024-01-23T04:18:19.231119104', '2023-06-01T04:10:44.893907968', '2023-09-02T16:18:20.230413056'], shape=(47892,), dtype='datetime64[ns]') - x(x)float647.001e+05 7.003e+05 ... 8e+05

- description :

- x coordinate of projection

- standard_name :

- projection_x_coordinate

array([700132.5, 700252.5, 700372.5, ..., 799732.5, 799852.5, 799972.5], shape=(833,)) - y(y)float643.4e+06 3.4e+06 ... 3.3e+06 3.3e+06

- description :

- y coordinate of projection

- standard_name :

- projection_y_coordinate

array([3399907.5, 3399787.5, 3399667.5, ..., 3300307.5, 3300187.5, 3300067.5], shape=(833,))


- description :

- velocity magnitude

- grid_mapping :

- mapping

- standard_name :

- land_ice_surface_velocity

- units :

- meter/year
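As a quick sanity check on the sizes reported in the repr above, the sketch below recomputes them from the shape of `v`, the float32 item size (4 bytes), and the reported chunk shape `(47892, 20, 20)`. It uses only the standard library and never touches the data itself.

```python
import math

# Shape of the 3-D variable `v`, copied from the repr above
n_time, n_y, n_x = 47892, 833, 833
itemsize = 4                   # bytes per float32 value
chunk_shape = (47892, 20, 20)  # chunk shape reported for `v`

total_bytes = n_time * n_y * n_x * itemsize
chunk_bytes = math.prod(chunk_shape) * itemsize
# Number of chunks: every chunk spans the full time axis, so only y and x are split
n_chunks = math.ceil(n_y / chunk_shape[1]) * math.ceil(n_x / chunk_shape[2])

print(f"total: {total_bytes / 1e9:.1f} GB = {total_bytes / 2**30:.2f} GiB")
print(f"per chunk: {chunk_bytes / 2**20:.2f} MiB, number of chunks: {n_chunks}")
```

The results (about 132.9 GB or 123.80 GiB in total, 73.08 MiB per chunk, 1764 chunks) match the repr, which is why the whole variable cannot be loaded into memory at once while any single chunk easily can.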

We are reading this in as a dask array. Let’s take a look at the chunk sizes:

Note

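`chunksizes` returns a mapping from each dimension name to the sizes of all chunks along that dimension (as the output below shows). For a Dataset, `chunks` returns the same mapping; for a DataArray, `chunks` instead returns a tuple of chunk-size tuples ordered by dimension, so `chunksizes` is the more convenient choice there. Both raise an error if the variables in a Dataset have inconsistent chunks along a dimension.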

dc.chunksizes

ValueError: Object has inconsistent chunks along dimension y. This can be fixed by calling unify_chunks().

As suggested in the error message, apply the unify_chunks() method:

dc = dc.unify_chunks()

Great, that worked. Now we can see the chunk sizes of the dataset:

dc.chunksizes

Frozen({'mid_date': (32736, 15156), 'y': (20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 13), 'x': (20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 13)})
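The `y` and `x` tuples follow from splitting 833 cells into 20-cell chunks: 41 full chunks plus a final chunk of 13. The `mid_date` tuple is consistent with `unify_chunks()` merging the chunk boundaries of variables that are, presumably, chunked differently along time. The sketch below models both ideas with two small, hypothetical helpers (`regular_chunks`, `common_refinement`); dask’s own chunk-unification logic is more involved, so treat this as a simplified illustration rather than its implementation.

```python
from itertools import accumulate


def regular_chunks(length, size):
    """Split an axis of `length` cells into chunks of `size`, remainder last."""
    n_full, remainder = divmod(length, size)
    return (size,) * n_full + ((remainder,) if remainder else ())


def common_refinement(*chunkings):
    """Merge the chunk boundaries of several chunkings of the same axis."""
    total = sum(chunkings[0])
    if any(sum(c) != total for c in chunkings):
        raise ValueError("all chunkings must cover the same axis length")
    # Cumulative end offsets of every chunk, e.g. (20, 20, 13) -> 20, 40, 53
    boundaries = sorted({end for c in chunkings for end in accumulate(c)})
    # Turn the merged boundaries back into chunk lengths
    starts = [0] + boundaries[:-1]
    return tuple(end - start for start, end in zip(starts, boundaries))


y_chunks = regular_chunks(833, 20)
print(len(y_chunks), y_chunks[-1])                    # 42 chunks, last one 13 cells
print(common_refinement((47892,), (32736, 15156)))    # (32736, 15156)
```

Squaring the 42 chunks per spatial axis gives the 1764 spatial chunks reported for `v` earlier.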

Note

Passing `chunks='auto'` to `xr.open_dataset()` lets dask choose chunk sizes that take the on-disk (zarr) chunking into account, so the chunks line up with the structure of the underlying data. To avoid imposing a chunk size that isn’t a good fit for the data, wait to re-chunk until we have selected the subset of the larger dataset that covers our area of interest.

Check the CRS of the xarray object:

dc.attrs['projection']

'32646'
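The `projection` attribute is an EPSG code: EPSG:32646 is WGS 84 / UTM zone 46N, as the `crs_wkt` attribute of the `mapping` variable spells out. As a consistency check, the sketch below applies the standard 6-degree UTM zone formula to the datacube’s `latitude` (30.26) and `longitude` (95.6) attributes. The helper `utm_epsg` is hypothetical, and the formula deliberately ignores the special zones around Norway and Svalbard.

```python
import math


def utm_epsg(lat, lon):
    """Return (EPSG code, UTM zone, central meridian) for a WGS 84 point.

    Standard 6-degree zone formula; ignores the Norway/Svalbard exceptions.
    """
    zone = min(int(math.floor((lon + 180.0) / 6.0)) + 1, 60)
    base = 32600 if lat >= 0 else 32700  # 326xx = northern, 327xx = southern hemisphere
    central_meridian = -183 + 6 * zone   # degrees east
    return base + zone, zone, central_meridian


epsg, zone, cm = utm_epsg(30.26, 95.6)
print(epsg, zone, cm)
```

The result agrees with the `projection` attribute and with the central meridian of 93 listed in the `mapping` variable, which is a useful check before clipping the cube with vector data that may be stored in a different CRS.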

Let’s take a look at the time dimension (mid_date here). To start with we’ll just print the first 10 values:

for element in range(10):
    print(dc.mid_date[element].data)

2022-06-07T04:21:44.211208960

2018-04-14T04:18:49.171219968

2017-02-10T16:15:50.660901120

2022-04-03T04:19:01.211214080

2021-07-22T04:16:46.210427904

2019-03-15T04:15:44.180925952

2002-09-15T03:59:12.379172096

2002-12-28T03:42:16.181281024

2021-06-29T16:16:10.210323968

2022-03-26T16:18:35.211123968

The time dimension is not in chronological order, so let’s fix that:
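The sketch below checks the ordering of the ten values printed above with NumPy and then sorts them by computing a permutation with `argsort`. xarray’s `sortby` works along similar lines, computing a sort order along `mid_date` and then reordering the dataset by position (roughly like `dc.isel(mid_date=order)`); this is a simplified illustration, not its exact implementation.

```python
import numpy as np

# The first ten mid_date values printed above
mid_date = np.array([
    "2022-06-07T04:21:44.211208960",
    "2018-04-14T04:18:49.171219968",
    "2017-02-10T16:15:50.660901120",
    "2022-04-03T04:19:01.211214080",
    "2021-07-22T04:16:46.210427904",
    "2019-03-15T04:15:44.180925952",
    "2002-09-15T03:59:12.379172096",
    "2002-12-28T03:42:16.181281024",
    "2021-06-29T16:16:10.210323968",
    "2022-03-26T16:18:35.211123968",
], dtype="datetime64[ns]")

# A 1-D time axis is chronological if no step goes backwards
is_sorted = bool(np.all(mid_date[1:] >= mid_date[:-1]))

# Sorting = compute a permutation of positions, then reorder by it
order = np.argsort(mid_date, kind="stable")
sorted_dates = mid_date[order]

print(is_sorted)          # False
print(sorted_dates[:3])
```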

Note

In some environments, the following cell has produced an error that usually leads to a dead kernel. Let’s try to troubleshoot this below.

dc_timesorted = dc.sortby(dc['mid_date'])

Note: work-around if the above cell produces an error#

Note

In some test cases, the above cell triggered an error. While the current version runs fine, I’m including a work-around in the following cells in case you encounter this error as well. If you don’t, feel free to skip ahead to the next sub-section heading.

Let’s follow some internet advice and try to fix this issue. We will actually start over from the very beginning and read in the dataset using only xarray and not dask.

dc_new = xr.open_dataset(url, engine='zarr')

Now we have an xr.Dataset whose variables are lazily loaded by xarray’s own backend (they become numpy arrays when loaded) rather than dask arrays, which means we can re-index along the time dimension using xarray’s lazy indexing:

dc_new_timesorted = dc_new.sortby(dc_new['mid_date'])

for element in range(10):
    print(dc_new_timesorted.mid_date[element].data)

1986-09-11T03:31:15.003252992

1986-10-05T03:31:06.144750016

1986-10-21T03:31:34.493249984

1986-11-22T03:29:27.023556992

1986-11-30T03:29:08.710132992

1986-12-08T03:29:55.372057024

1986-12-08T03:33:17.095283968

1986-12-16T03:30:10.645544000

1986-12-24T03:29:52.332120960

1987-01-09T03:30:01.787228992

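Great, much easier. Now we convert the dataset to dask arrays with .chunk(). Called with no arguments, it puts each variable into a single chunk that spans the entire array, as the output below shows (one 123.80 GiB chunk per 3-D variable). Following the note above, we will choose more suitable chunk sizes after subsetting to our area of interest.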

dc_new_timesorted = dc_new_timesorted.chunk()

dc_new_timesorted.chunks

Frozen({'mid_date': (47892,), 'y': (833,), 'x': (833,)})
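Once the data has been subset, you will want to pass explicit chunk sizes to `.chunk()` instead. As one possible way to pick them, the hypothetical helper below finds the largest square y/x tile that keeps each chunk spanning the full time axis (handy for time-series analysis) while staying under a target size. The 128 MiB target is an assumption chosen to match dask’s default `array.chunk-size` setting, and `itemsize=4` assumes float32 data; neither value comes from this notebook.

```python
import math


def square_tile_side(n_time, target_bytes, itemsize=4):
    """Largest side s such that an (n_time, s, s) chunk fits in target_bytes.

    itemsize=4 assumes float32 values.
    """
    bytes_per_pixel_series = n_time * itemsize  # one full time series at one (y, x)
    return math.isqrt(target_bytes // bytes_per_pixel_series)


target = 128 * 2**20  # 128 MiB (assumed target)
side = square_tile_side(47892, target)
chunks = {"mid_date": 47892, "y": side, "x": side}
print(chunks)  # {'mid_date': 47892, 'y': 26, 'x': 26}
```

For the full time axis this gives 26 x 26 tiles. A dict like `chunks`, recomputed with the subset’s own time length, could then be passed to `.chunk()` on the clipped dataset.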

dc_new_timesorted

<xarray.Dataset> Size: 1TB

Dimensions: (mid_date: 47892, y: 833, x: 833)

Coordinates:

* mid_date (mid_date) datetime64[ns] 383kB 1986-09-11T03...

* x (x) float64 7kB 7.001e+05 7.003e+05 ... 8e+05

* y (y) float64 7kB 3.4e+06 3.4e+06 ... 3.3e+06

Data variables: (12/60)

M11 (mid_date, y, x) float32 133GB dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

M11_dr_to_vr_factor (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>

M12 (mid_date, y, x) float32 133GB dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

M12_dr_to_vr_factor (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>

acquisition_date_img1 (mid_date) datetime64[ns] 383kB dask.array<chunksize=(47892,), meta=np.ndarray>

acquisition_date_img2 (mid_date) datetime64[ns] 383kB dask.array<chunksize=(47892,), meta=np.ndarray>

... ...

vy_error_modeled (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>

vy_error_slow (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>

vy_error_stationary (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>

vy_stable_shift (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>

vy_stable_shift_slow (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>

vy_stable_shift_stationary (mid_date) float32 192kB dask.array<chunksize=(47892,), meta=np.ndarray>

Attributes: (12/19)

Conventions: CF-1.8

GDAL_AREA_OR_POINT: Area

author: ITS_LIVE, a NASA MEaSUREs project (its-live.j...

autoRIFT_parameter_file: http://its-live-data.s3.amazonaws.com/autorif...

datacube_software_version: 1.0

date_created: 25-Sep-2023 22:00:23

... ...

s3: s3://its-live-data/datacubes/v2/N30E090/ITS_L...

skipped_granules: s3://its-live-data/datacubes/v2/N30E090/ITS_L...

time_standard_img1: UTC

time_standard_img2: UTC

title: ITS_LIVE datacube of image pair velocities

url: https://its-live-data.s3.amazonaws.com/datacu...

- mid_date: 47892

- y: 833

- x: 833

- mid_date(mid_date)datetime64[ns]1986-09-11T03:31:15.003252992 .....

- description :

- midpoint of image 1 and image 2 acquisition date and time with granule's centroid longitude and latitude as microseconds

- standard_name :

- image_pair_center_date_with_time_separation

array(['1986-09-11T03:31:15.003252992', '1986-10-05T03:31:06.144750016', '1986-10-21T03:31:34.493249984', ..., '2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000', '2024-10-29T04:18:09.241024000'], shape=(47892,), dtype='datetime64[ns]') - x(x)float647.001e+05 7.003e+05 ... 8e+05

- description :

- x coordinate of projection

- standard_name :

- projection_x_coordinate

array([700132.5, 700252.5, 700372.5, ..., 799732.5, 799852.5, 799972.5], shape=(833,)) - y(y)float643.4e+06 3.4e+06 ... 3.3e+06 3.3e+06

- description :

- y coordinate of projection

- standard_name :

- projection_y_coordinate

array([3399907.5, 3399787.5, 3399667.5, ..., 3300307.5, 3300187.5, 3300067.5], shape=(833,))

- M11(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- conversion matrix element (1st row, 1st column) that can be multiplied with vx to give range pixel displacement dr (see Eq. A18 in https://www.mdpi.com/2072-4292/13/4/749)

- grid_mapping :

- mapping

- standard_name :

- conversion_matrix_element_11

- units :

- pixel/(meter/year)

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - M11_dr_to_vr_factor(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- multiplicative factor that converts slant range pixel displacement dr to slant range velocity vr

- standard_name :

- M11_dr_to_vr_factor

- units :

- meter/(year*pixel)

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - M12(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- conversion matrix element (1st row, 2nd column) that can be multiplied with vy to give range pixel displacement dr (see Eq. A18 in https://www.mdpi.com/2072-4292/13/4/749)

- grid_mapping :

- mapping

- standard_name :

- conversion_matrix_element_12

- units :

- pixel/(meter/year)

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - M12_dr_to_vr_factor(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- multiplicative factor that converts slant range pixel displacement dr to slant range velocity vr

- standard_name :

- M12_dr_to_vr_factor

- units :

- meter/(year*pixel)

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - acquisition_date_img1(mid_date)datetime64[ns]dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- acquisition date and time of image 1

- standard_name :

- image1_acquition_date

Array Chunk Bytes 374.16 kiB 374.16 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type datetime64[ns] numpy.ndarray - acquisition_date_img2(mid_date)datetime64[ns]dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- acquisition date and time of image 2

- standard_name :

- image2_acquition_date

Array Chunk Bytes 374.16 kiB 374.16 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type datetime64[ns] numpy.ndarray - autoRIFT_software_version(mid_date)<U5dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- version of autoRIFT software

- standard_name :

- autoRIFT_software_version

Array Chunk Bytes 0.91 MiB 0.91 MiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type - chip_size_height(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- chip_size_coordinates :

- Optical data: chip_size_coordinates = 'image projection geometry: width = x, height = y'. Radar data: chip_size_coordinates = 'radar geometry: width = range, height = azimuth'

- description :

- height of search template (chip)

- grid_mapping :

- mapping

- standard_name :

- chip_size_height

- units :

- m

- y_pixel_size :

- 10.0

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - chip_size_width(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- chip_size_coordinates :

- Optical data: chip_size_coordinates = 'image projection geometry: width = x, height = y'. Radar data: chip_size_coordinates = 'radar geometry: width = range, height = azimuth'

- description :

- width of search template (chip)

- grid_mapping :

- mapping

- standard_name :

- chip_size_width

- units :

- m

- x_pixel_size :

- 10.0

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - date_center(mid_date)datetime64[ns]dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- midpoint of image 1 and image 2 acquisition date

- standard_name :

- image_pair_center_date

Array Chunk Bytes 374.16 kiB 374.16 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type datetime64[ns] numpy.ndarray - date_dt(mid_date)timedelta64[ns]dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- time separation between acquisition of image 1 and image 2

- standard_name :

- image_pair_time_separation

Array Chunk Bytes 374.16 kiB 374.16 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type timedelta64[ns] numpy.ndarray - floatingice(y, x)float32dask.array<chunksize=(833, 833), meta=np.ndarray>

- description :

- floating ice mask, 0 = non-floating-ice, 1 = floating-ice

- flag_meanings :

- non-ice ice

- flag_values :

- [0, 1]

- grid_mapping :

- mapping

- standard_name :

- floating ice mask

- url :

- https://its-live-data.s3.amazonaws.com/autorift_parameters/v001/N46_0120m_floatingice.tif

Array Chunk Bytes 2.65 MiB 2.65 MiB Shape (833, 833) (833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - granule_url(mid_date)<U1024dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- original granule URL

- standard_name :

- granule_url

Array Chunk Bytes 187.08 MiB 187.08 MiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type - interp_mask(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- light interpolation mask

- flag_meanings :

- measured interpolated

- flag_values :

- [0, 1]

- grid_mapping :

- mapping

- standard_name :

- interpolated_value_mask

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - landice(y, x)float32dask.array<chunksize=(833, 833), meta=np.ndarray>

- description :

- land ice mask, 0 = non-land-ice, 1 = land-ice

- flag_meanings :

- non-ice ice

- flag_values :

- [0, 1]

- grid_mapping :

- mapping

- standard_name :

- land ice mask

- url :

- https://its-live-data.s3.amazonaws.com/autorift_parameters/v001/N46_0120m_landice.tif

Array Chunk Bytes 2.65 MiB 2.65 MiB Shape (833, 833) (833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - mapping()<U1...

- GeoTransform :

- 700072.5 120.0 0 3399967.5 0 -120.0

- crs_wkt :

- PROJCS["WGS 84 / UTM zone 46N",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0,AUTHORITY["EPSG","8901"]],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",93],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32646"]]

- false_easting :

- 500000.0

- false_northing :

- 0.0

- grid_mapping_name :

- universal_transverse_mercator

- inverse_flattening :

- 298.257223563

- latitude_of_projection_origin :

- 0.0

- longitude_of_central_meridian :

- 93.0

- proj4text :

- +proj=utm +zone=46 +datum=WGS84 +units=m +no_defs

- scale_factor_at_central_meridian :

- 0.9996

- semi_major_axis :

- 6378137.0

- spatial_epsg :

- 32646

- spatial_ref :

- PROJCS["WGS 84 / UTM zone 46N",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0,AUTHORITY["EPSG","8901"]],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",93],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",0],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32646"]]

- utm_zone_number :

- 46.0

[1 values with dtype=<U1]

- mission_img1(mid_date)<U1dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- id of the mission that acquired image 1

- standard_name :

- image1_mission

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type - mission_img2(mid_date)<U1dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- id of the mission that acquired image 2

- standard_name :

- image2_mission

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type - roi_valid_percentage(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- percentage of pixels with a valid velocity estimate determined for the intersection of the full image pair footprint and the region of interest (roi) that defines where autoRIFT tried to estimate a velocity

- standard_name :

- region_of_interest_valid_pixel_percentage

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - satellite_img1(mid_date)<U2dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- id of the satellite that acquired image 1

- standard_name :

- image1_satellite

Array Chunk Bytes 374.16 kiB 374.16 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type - satellite_img2(mid_date)<U2dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- id of the satellite that acquired image 2

- standard_name :

- image2_satellite

Array Chunk Bytes 374.16 kiB 374.16 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type - sensor_img1(mid_date)<U3dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- id of the sensor that acquired image 1

- standard_name :

- image1_sensor

Array Chunk Bytes 561.23 kiB 561.23 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type - sensor_img2(mid_date)<U3dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- id of the sensor that acquired image 2

- standard_name :

- image2_sensor

Array Chunk Bytes 561.23 kiB 561.23 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type - stable_count_slow(mid_date)uint16dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- number of valid pixels over slowest 25% of ice

- standard_name :

- stable_count_slow

- units :

- count

Array Chunk Bytes 93.54 kiB 93.54 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type uint16 numpy.ndarray - stable_count_stationary(mid_date)uint16dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- number of valid pixels over stationary or slow-flowing surfaces

- standard_name :

- stable_count_stationary

- units :

- count

Array Chunk Bytes 93.54 kiB 93.54 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type uint16 numpy.ndarray - stable_shift_flag(mid_date)uint8dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- flag for applying velocity bias correction: 0 = no correction; 1 = correction from overlapping stable surface mask (stationary or slow-flowing surfaces with velocity < 15 m/yr)(top priority); 2 = correction from slowest 25% of overlapping velocities (second priority)

- standard_name :

- stable_shift_flag

Array Chunk Bytes 46.77 kiB 46.77 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type uint8 numpy.ndarray - v(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- velocity magnitude

- grid_mapping :

- mapping

- standard_name :

- land_ice_surface_velocity

- units :

- meter/year

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - v_error(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- velocity magnitude error

- grid_mapping :

- mapping

- standard_name :

- velocity_error

- units :

- meter/year

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - va(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- velocity in radar azimuth direction

- grid_mapping :

- mapping

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - va_error(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- error for velocity in radar azimuth direction

- standard_name :

- va_error

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - va_error_modeled(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- 1-sigma error calculated using a modeled error-dt relationship

- standard_name :

- va_error_modeled

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - va_error_slow(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- RMSE over slowest 25% of retrieved velocities

- standard_name :

- va_error_slow

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - va_error_stationary(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- RMSE over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- va_error_stationary

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - va_stable_shift(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- applied va shift calibrated using pixels over stable or slow surfaces

- standard_name :

- va_stable_shift

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - va_stable_shift_slow(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- va shift calibrated using valid pixels over slowest 25% of retrieved velocities

- standard_name :

- va_stable_shift_slow

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - va_stable_shift_stationary(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- va shift calibrated using valid pixels over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- va_stable_shift_stationary

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vr(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- velocity in radar range direction

- grid_mapping :

- mapping

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vr_error(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- error for velocity in radar range direction

- standard_name :

- vr_error

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vr_error_modeled(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- 1-sigma error calculated using a modeled error-dt relationship

- standard_name :

- vr_error_modeled

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vr_error_slow(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- RMSE over slowest 25% of retrieved velocities

- standard_name :

- vr_error_slow

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vr_error_stationary(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- RMSE over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- vr_error_stationary

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vr_stable_shift(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- applied vr shift calibrated using pixels over stable or slow surfaces

- standard_name :

- vr_stable_shift

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vr_stable_shift_slow(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- vr shift calibrated using valid pixels over slowest 25% of retrieved velocities

- standard_name :

- vr_stable_shift_slow

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vr_stable_shift_stationary(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- vr shift calibrated using valid pixels over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- vr_stable_shift_stationary

- units :

- meter/year

Array Chunk Bytes 187.08 kiB 187.08 kiB Shape (47892,) (47892,) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vx(mid_date, y, x)float32dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- velocity component in x direction

- grid_mapping :

- mapping

- standard_name :

- land_ice_surface_x_velocity

- units :

- meter/year

Array Chunk Bytes 123.80 GiB 123.80 GiB Shape (47892, 833, 833) (47892, 833, 833) Dask graph 1 chunks in 2 graph layers Data type float32 numpy.ndarray - vx_error(mid_date)float32dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- best estimate of x_velocity error: vx_error is populated according to the approach used for the velocity bias correction as indicated in "stable_shift_flag"

- standard_name :

- vx_error

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vx_error_modeled (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- 1-sigma error calculated using a modeled error-dt relationship

- standard_name :

- vx_error_modeled

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vx_error_slow (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- RMSE over slowest 25% of retrieved velocities

- standard_name :

- vx_error_slow

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vx_error_stationary (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- RMSE over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 meter/year identified from an external mask

- standard_name :

- vx_error_stationary

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vx_stable_shift (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- applied vx shift calibrated using pixels over stable or slow surfaces

- standard_name :

- vx_stable_shift

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vx_stable_shift_slow (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- vx shift calibrated using valid pixels over slowest 25% of retrieved velocities

- standard_name :

- vx_stable_shift_slow

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vx_stable_shift_stationary (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- vx shift calibrated using valid pixels over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- vx_stable_shift_stationary

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vy (mid_date, y, x) float32 dask.array<chunksize=(47892, 833, 833), meta=np.ndarray>

- description :

- velocity component in y direction

- grid_mapping :

- mapping

- standard_name :

- land_ice_surface_y_velocity

- units :

- meter/year

Dask array: 123.80 GiB, shape (47892, 833, 833), in 1 chunk of 123.80 GiB, shape (47892, 833, 833); 2 graph layers; data type float32 numpy.ndarray

- vy_error (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- best estimate of y_velocity error: vy_error is populated according to the approach used for the velocity bias correction as indicated in "stable_shift_flag"

- standard_name :

- vy_error

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vy_error_modeled (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- 1-sigma error calculated using a modeled error-dt relationship

- standard_name :

- vy_error_modeled

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vy_error_slow (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- RMSE over slowest 25% of retrieved velocities

- standard_name :

- vy_error_slow

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vy_error_stationary (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- RMSE over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 meter/year identified from an external mask

- standard_name :

- vy_error_stationary

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vy_stable_shift (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- applied vy shift calibrated using pixels over stable or slow surfaces

- standard_name :

- vy_stable_shift

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vy_stable_shift_slow (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- vy shift calibrated using valid pixels over slowest 25% of retrieved velocities

- standard_name :

- vy_stable_shift_slow

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray

- vy_stable_shift_stationary (mid_date) float32 dask.array<chunksize=(47892,), meta=np.ndarray>

- description :

- vy shift calibrated using valid pixels over stable surfaces, stationary or slow-flowing surfaces with velocity < 15 m/yr identified from an external mask

- standard_name :

- vy_stable_shift_stationary

- units :

- meter/year

Dask array: 187.08 kiB, shape (47892,), in 1 chunk of 187.08 kiB, shape (47892,); 2 graph layers; data type float32 numpy.ndarray
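The `vx` and `vy` variables listed above hold the x and y components of surface velocity in meter/year on the (mid_date, y, x) grid. A minimal sketch of combining them into speed, the magnitude of the velocity vector, using small synthetic DataArrays in place of the 123.80 GiB cube; the toy values and the name `v` are assumptions for illustration only:

```python
import numpy as np
import xarray as xr

# Toy stand-ins for the cube's vx and vy (dims mid_date, y, x; units meter/year).
# The numbers are invented for illustration; the real arrays are lazy dask arrays.
coords = {
    "mid_date": np.array(["1986-09-11", "1986-10-05"], dtype="datetime64[ns]"),
    "y": [3399907.5, 3399787.5],
    "x": [700132.5, 700252.5],
}
dims = ("mid_date", "y", "x")
vx = xr.DataArray(
    np.array([[[3, 0], [6, -8]], [[5, 1], [0, 0]]], dtype="float32"),
    dims=dims, coords=coords, name="vx",
)
vy = xr.DataArray(
    np.array([[[4, 2], [8, 6]], [[12, 0], [0, 0]]], dtype="float32"),
    dims=dims, coords=coords, name="vy",
)

# Speed = magnitude of the velocity vector, sqrt(vx**2 + vy**2).
# xarray aligns the two arrays on their shared dims/coords; on dask-backed
# inputs the result stays lazy until .compute(), .load(), or plotting.
v = np.sqrt(vx**2 + vy**2)
v.name = "v"
v.attrs["units"] = "meter/year"  # set units explicitly rather than relying on attrs propagation

print(v.sel(mid_date="1986-09-11").values)
```

Applied to the real `vx` and `vy`, the same expression only builds a dask graph; no data is read until a result is actually requested, which matters for arrays of this size.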

- mid_date: PandasIndex

PandasIndex(DatetimeIndex(['1986-09-11 03:31:15.003252992', '1986-10-05 03:31:06.144750016', '1986-10-21 03:31:34.493249984', '1986-11-22 03:29:27.023556992', '1986-11-30 03:29:08.710132992', '1986-12-08 03:29:55.372057024', '1986-12-08 03:33:17.095283968', '1986-12-16 03:30:10.645544', '1986-12-24 03:29:52.332120960', '1987-01-09 03:30:01.787228992', ... '2024-10-21 16:17:50.241008896', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-24 04:17:34.241014016', '2024-10-25 04:10:35.189837056', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024', '2024-10-29 04:18:09.241024'], dtype='datetime64[ns]', name='mid_date', length=47892, freq=None))

- x: PandasIndex

PandasIndex(Index([700132.5, 700252.5, 700372.5, 700492.5, 700612.5, 700732.5, 700852.5, 700972.5, 701092.5, 701212.5, ... 798892.5, 799012.5, 799132.5, 799252.5, 799372.5, 799492.5, 799612.5, 799732.5, 799852.5, 799972.5], dtype='float64', name='x', length=833))

- y: PandasIndex

PandasIndex(Index([3399907.5, 3399787.5, 3399667.5, 3399547.5, 3399427.5, 3399307.5, 3399187.5, 3399067.5, 3398947.5, 3398827.5, ... 3301147.5, 3301027.5, 3300907.5, 3300787.5, 3300667.5, 3300547.5, 3300427.5, 3300307.5, 3300187.5, 3300067.5], dtype='float64', name='y', length=833))
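The x and y indexes above are regularly spaced projected coordinates in meters. A short numpy check, using only the first value, the length, and a step inferred from consecutive listed values, that they form an 833 × 833 grid at 120 m spacing. The 120 m step is an inference from the printed values; it is consistent with the `autorift_landice_0120m` parameter file named in the attributes.

```python
import numpy as np

# Values copied from the x and y PandasIndex reprs above.
n = 833                         # length of both x and y
x0, y0 = 700132.5, 3399907.5    # first x (west) and first y (north) coordinate, meters
step = 120.0                    # spacing inferred from consecutive listed values

# x increases eastward; y decreases southward (north-up grid).
x = x0 + step * np.arange(n)
y = y0 - step * np.arange(n)

# Compare against the first ten values printed in each index.
x_head = np.array([700132.5, 700252.5, 700372.5, 700492.5, 700612.5,
                   700732.5, 700852.5, 700972.5, 701092.5, 701212.5])
y_head = np.array([3399907.5, 3399787.5, 3399667.5, 3399547.5, 3399427.5,
                   3399307.5, 3399187.5, 3399067.5, 3398947.5, 3398827.5])
assert np.array_equal(x[:10], x_head) and np.array_equal(y[:10], y_head)

# The last coordinates should match the tail of each printed index.
print(x[-1], y[-1])
```

The reconstructed last values equal the tails of the printed indexes (799972.5 and 3300067.5). First and last coordinates are 832 × 120 m = 99,840 m apart on each axis, so both fall inside the 100 km square given by the `proj_polygon` attribute.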

- Conventions :

- CF-1.8

- GDAL_AREA_OR_POINT :

- Area

- author :

- ITS_LIVE, a NASA MEaSUREs project (its-live.jpl.nasa.gov)

- autoRIFT_parameter_file :

- http://its-live-data.s3.amazonaws.com/autorift_parameters/v001/autorift_landice_0120m.shp

- datacube_software_version :

- 1.0

- date_created :

- 25-Sep-2023 22:00:23

- date_updated :

- 13-Nov-2024 00:08:07

- geo_polygon :

- [[95.06959008486952, 29.814255053135895], [95.32812062059084, 29.809951334550703], [95.58659184122865, 29.80514261876954], [95.84499718862224, 29.7998293459177], [96.10333011481168, 29.79401200205343], [96.11032804508507, 30.019297601073085], [96.11740568350054, 30.244573983323825], [96.12456379063154, 30.469841094022847], [96.1318031397002, 30.695098878594504], [95.87110827645229, 30.70112924501256], [95.61033817656023, 30.7066371044805], [95.34949964126946, 30.711621947056347], [95.08859948278467, 30.716083310981194], [95.08376623410525, 30.49063893600811], [95.07898726183609, 30.26518607254204], [95.0742620484426, 30.039724763743482], [95.06959008486952, 29.814255053135895]]

- institution :

- NASA Jet Propulsion Laboratory (JPL), California Institute of Technology

- latitude :

- 30.26

- longitude :

- 95.6

- proj_polygon :

- [[700000, 3300000], [725000.0, 3300000.0], [750000.0, 3300000.0], [775000.0, 3300000.0], [800000, 3300000], [800000.0, 3325000.0], [800000.0, 3350000.0], [800000.0, 3375000.0], [800000, 3400000], [775000.0, 3400000.0], [750000.0, 3400000.0], [725000.0, 3400000.0], [700000, 3400000], [700000.0, 3375000.0], [700000.0, 3350000.0], [700000.0, 3325000.0], [700000, 3300000]]

- projection :

- 32646

- s3 :